2 July 2024, by Sebastian Lang

Snowflake and ML: Using data intelligently - Level Up your Data!

Snowflake was introduced and described by its founders in 2016 as an "Elastic Data Warehouse" in the white paper of the same name. Today, eight years later, Snowflake is a comprehensive data platform that is well suited to numerous workloads, including data science and AI/ML. This first part of my blog post on Snowflake's AI capabilities focuses on its current capabilities for end-to-end machine learning on a single platform, using data managed within the enterprise. These capabilities support both custom and out-of-the-box workflows and cover feature engineering, training APIs, model registration and more.

Figure 1: Overview of Snowflake ML, source: https://quickstarts.snowflake.com/guide/intro_to_machine_learning_with_snowpark_ml_for_python/img/d11e9b2521a7de62.png

What options does Snowflake offer?

ML functions

Snowflake offers ready-to-use, ML-powered analytics functions that deliver automated predictions and insights from your data. They are particularly suitable if you are not an ML expert:

- Time-Series Functions: These functions train an ML model on time-series data to determine how a particular value varies over time and relative to other data. They include:

  - 'Forecasting' uses historical data to predict future values.

  - 'Anomaly Detection' automatically identifies unexpected patterns or outliers.

  - 'Contribution Explorer' helps to find the dimensions and values that contribute most to changes in the time-series data.

- Classification: This function does not require time-series data. It sorts rows into two or more classes based on their most predictive characteristics.
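To make the time-series functions more concrete, here is a deliberately simplified, pure-Python sketch of forecast-based anomaly detection: fit a trend to historical values, forecast the following points and flag observations that fall too far from the forecast. The least-squares linear trend, the band of k residual standard deviations and the function names `fit_linear_trend` and `detect_anomalies` are assumptions for illustration; this is not the model that Snowflake's ML Functions use internally.

```python
from statistics import mean, pstdev

# Illustrative only: a least-squares linear trend with a fixed k-sigma band.
# This is an assumption for demonstration, not Snowflake's internal model.


def fit_linear_trend(values):
    """Fit y = a + b * t (t = 0..n-1) by least squares; return (a, b)."""
    if len(values) < 2:
        raise ValueError("need at least two historical points")
    ts = list(range(len(values)))
    t_mean, y_mean = mean(ts), mean(values)
    cov = sum((t - t_mean) * (y - y_mean) for t, y in zip(ts, values))
    var = sum((t - t_mean) ** 2 for t in ts)
    slope = cov / var
    return y_mean - slope * t_mean, slope


def detect_anomalies(history, new_values, k=3.0):
    """Forecast each new point from the trend and flag it if it lies more
    than k residual standard deviations away from that forecast."""
    a, b = fit_linear_trend(history)
    residuals = [y - (a + b * t) for t, y in enumerate(history)]
    sigma = pstdev(residuals)
    results = []
    for offset, y in enumerate(new_values):
        t = len(history) + offset  # continue the time index after the history
        forecast = a + b * t
        results.append((t, y, round(forecast, 2), abs(y - forecast) > k * sigma))
    return results


if __name__ == "__main__":
    history = [10, 12, 11, 13, 12, 14, 13, 15]
    for t, y, forecast, is_anomaly in detect_anomalies(history, [15, 16, 30]):
        print(f"t={t} value={y} forecast={forecast} anomaly={is_anomaly}")
```

The same forecast serves both purposes here: predicting future values and judging whether a newly observed value is unexpected.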

Figure 2: How to train a model and create forecasts - in just a few lines of SQL, source: https://docs.snowflake.com/en/user-guide/ml-functions/forecasting
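If you drive Snowflake from Python rather than a SQL worksheet, a small helper can assemble the statements shown in Figure 2. The sketch below only builds strings. The statement shapes follow the `CREATE SNOWFLAKE.ML.FORECAST` and `CALL <model>!FORECAST` syntax as documented by Snowflake at the time of writing, while the helper name `forecast_sql` and the model, table and column names are hypothetical placeholders. Since the syntax of these functions has evolved, check the current documentation before relying on it.

```python
def forecast_sql(model, table, timestamp_col, target_col, periods):
    """Return (train_sql, predict_sql) for a single-series forecast.

    All identifiers are hypothetical placeholders. They are interpolated
    verbatim, so only pass trusted, pre-validated names (no user input).
    """
    if periods <= 0:
        raise ValueError("periods must be positive")
    # Train (or retrain) a forecasting model on the given table or view.
    train_sql = (
        f"CREATE OR REPLACE SNOWFLAKE.ML.FORECAST {model}(\n"
        f"  INPUT_DATA => TABLE({table}),\n"
        f"  TIMESTAMP_COLNAME => '{timestamp_col}',\n"
        f"  TARGET_COLNAME => '{target_col}'\n"
        f");"
    )
    # Ask the trained model for `periods` future values.
    predict_sql = f"CALL {model}!FORECAST(FORECASTING_PERIODS => {periods});"
    return train_sql, predict_sql


if __name__ == "__main__":
    train_sql, predict_sql = forecast_sql(
        "sales_model", "sales_history", "order_date", "revenue", 14
    )
    print(train_sql)
    print(predict_sql)
```

With a Snowpark session, the two statements could then be executed in order with `session.sql(train_sql).collect()` and `session.sql(predict_sql).collect()`.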

Custom ML

Figure 3: Python library and underlying infrastructure for ML workflows, source: https://miro.medium.com/v2/resize:fit:1400/format:webp/1*EOHB2Z4OWzM6U93WhzKcow.png

For custom workflows, Snowflake provides APIs that support every stage of end-to-end development and deployment. The key components are Snowpark ML Modelling, the Model Registry and the Feature Store.

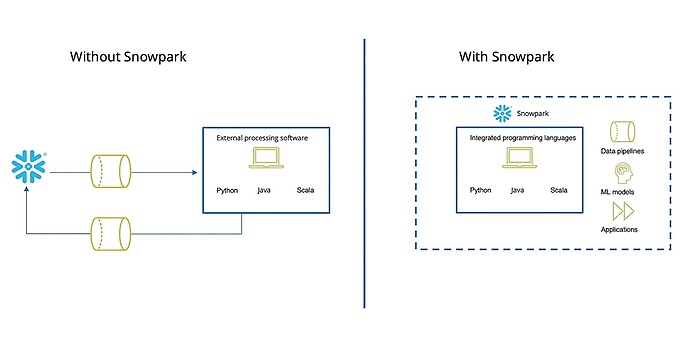

Figure 4: Data movement with and without Snowpark

Launched in 2021, Snowpark is a developer framework that extends Snowflake beyond SQL-based use cases. It supports the Python, Java and Scala programming languages and runs code on Snowflake's virtual warehouse infrastructure, so the data does not have to leave the platform. To get started with Snowflake ML, customers can use the Python APIs of the Snowpark ML library, which cover the following functions:

Figure 5: Modelling process with the Snowpark ML Modelling API, source: https://quickstarts.snowflake.com/guide/intro_to_machine_learning_with_snowpark_ml_for_python/img/8de45cc3c02b6206.png

- ML Modelling: With this interface, well-known Python frameworks such as scikit-learn, XGBoost and LightGBM can be used for data pre-processing, feature engineering and model training. Hyperparameter optimisation is supported out of the box.
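Hyperparameter optimisation boils down to training candidate models over a set of parameter combinations and keeping the best-scoring one. The stdlib-only sketch below shows an exhaustive grid search to illustrate the idea. It is not the Snowpark ML API, which instead offers scikit-learn-style search estimators such as `GridSearchCV` that execute the search in Snowflake. The `toy_score` objective is a hypothetical stand-in for "train on training data, evaluate on validation data".

```python
from itertools import product


def grid_search(train_and_score, param_grid):
    """Exhaustive grid search: evaluate every parameter combination.

    train_and_score(params) -> float is a hypothetical callback that trains a
    model with `params` and returns a validation score (higher is better).
    Returns (best_params, best_score); ties keep the first combination seen.
    """
    names = sorted(param_grid)  # fixed order so results are reproducible
    best_params, best_score = None, float("-inf")
    for values in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, values))
        score = train_and_score(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_score(params):
    # Hypothetical stand-in for "train, then score on validation data";
    # it peaks at max_depth=4 and learning_rate=0.1.
    return -abs(params["max_depth"] - 4) - 10 * abs(params["learning_rate"] - 0.1)


if __name__ == "__main__":
    grid = {"max_depth": [2, 4, 6], "learning_rate": [0.01, 0.1, 0.3]}
    best_params, best_score = grid_search(toy_score, grid)
    print(best_params, best_score)
```

Grid search grows multiplicatively with every added parameter, which is why running the candidate trainings in parallel on the platform's compute matters in practice.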

Figure 6: Model management with the Snowflake Model Registry, source: https://quickstarts.snowflake.com/guide/intro_to_machine_learning_with_snowpark_ml_for_python/img/39debc49af518225.png

- Model Registry: Enables secure management of models and their metadata, as well as batch inference. Models are stored as schema-level objects, so they can easily be shared with others in the organisation under Snowflake's role-based access control. The registry supports models trained both inside and outside Snowflake, multiple versions per model and the selection of a default version.

- Feature Store: Feature engineering, where raw data is converted into features that can be used to train ML models, is a central part of the workflow. The store is used to create, store and manage features. It provides:

  - a Python SDK for defining, registering, retrieving and managing features.

  - a backend infrastructure with dynamic tables, standard tables, views and tags for automated feature pipelines and secure governance.
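One job a feature store takes off your hands is point-in-time correct retrieval: when building training data, each example may only see feature values that already existed at its own timestamp, otherwise future information leaks into the model. The plain-Python sketch below illustrates that lookup for a "spine" of (entity, timestamp) pairs. It is a conceptual illustration, not the Snowflake Feature Store SDK, and the helper names, entity ids and values are hypothetical.

```python
from bisect import bisect_right
from collections import defaultdict
from datetime import date

# Illustrative only: entity ids, timestamps and feature values are hypothetical.


def build_feature_index(feature_rows):
    """Group (entity_id, ts, value) rows per entity, sorted by timestamp."""
    index = defaultdict(list)
    for entity_id, ts, value in feature_rows:
        index[entity_id].append((ts, value))
    for history in index.values():
        history.sort(key=lambda row: row[0])
    return dict(index)


def point_in_time_lookup(index, spine):
    """For each (entity_id, event_ts) in the spine, return the most recent
    feature value recorded at or before event_ts, or None if there is none.
    Using only past values keeps future information out of training data."""
    result = []
    for entity_id, event_ts in spine:
        history = index.get(entity_id, [])
        timestamps = [ts for ts, _ in history]
        pos = bisect_right(timestamps, event_ts)  # number of values with ts <= event_ts
        result.append(history[pos - 1][1] if pos else None)
    return result


if __name__ == "__main__":
    rows = [
        ("c1", date(2024, 1, 1), 100.0),
        ("c1", date(2024, 1, 5), 150.0),
        ("c1", date(2024, 1, 9), 120.0),
    ]
    spine = [("c1", date(2024, 1, 4)), ("c1", date(2024, 1, 9)), ("c2", date(2024, 1, 4))]
    print(point_in_time_lookup(build_feature_index(rows), spine))  # [100.0, 120.0, None]
```

A naive "latest value per entity" join would have returned 120.0 for the 4 January example, even though that value was only recorded five days later.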

The Python APIs of the Snowpark ML library (the snowflake-ml-python package) can be installed and used directly in Snowflake Notebooks as well as in any IDE or notebook environment, such as Jupyter or Hex. Snowflake Notebooks and all Snowflake ML operations run on Snowflake's ML compute infrastructure. This comprises the Virtual Warehouse Runtime for CPU processing and the Container Runtime for distributed CPU and GPU processing using Snowpark Container Services (which is covered in the second part of this article).

Data platform for AI: added value for customers

With ML Functions, users across an organisation can reduce development time, either by calling the SQL functions directly or by working in the new Snowflake Studio (public preview), an interactive no-code interface. Customers can easily and securely develop and scale custom ML models and capabilities without data movement, silos or governance compromises. ML pipelines benefit from familiar Python tools and frameworks, Snowpark DataFrames, and UDFs and stored procedures that can be developed from any client IDE or notebook and run directly alongside the data in Snowflake. The combination of a powerful data platform with advanced ML capabilities makes Snowflake particularly attractive for data engineers and data scientists.

Looking to the future

Looking ahead, Snowflake will continue to roll out innovative ML and, more recently, generative AI functions. One highlight in this context is the annual Snowflake Summit. This year's summit was once again held in San Francisco at the beginning of June and showcased numerous innovations, among them the no-code interface of the Snowflake Studio, the Feature Store and Snowflake Notebooks. One innovation not yet mentioned is ML Lineage, which is currently in private preview. This feature enables the tracking of datasets, features and models across the entire end-to-end lifecycle.

Would you like to find out more about exciting topics from the world of adesso? Then take a look at our previous blog posts.
