3 December 2025, by Ellen Tötsch

More than just chatbots – document matching powered by Gemini

What use cases first come to mind when it comes to large language models (LLMs) such as Gemini, GPT and Claude? In most cases, the answer is likely to be chat applications. This is no coincidence, as it was ChatGPT that took the world by storm at the end of 2022 and changed the work of machine learning engineers forever.

However, if you think only of chat when considering use cases for LLMs, you are limiting yourself unnecessarily. LLMs can not only chat, but also deliver structured results. With an output schema, you no longer have to ask nicely for a particular format; you can enforce exact compliance with a structural specification.

Document matching is an exciting field of application for language models that goes beyond chat.

hagebau and the challenge of document matching

This potential was also at the centre of the considerations of the hagebau purchasing association. As a large, internationally active group, the company faces the daily challenge of optimising its processes without neglecting personal, customer-oriented advice. One of the biggest challenges was the time-consuming and error-prone manual comparison of orders and order confirmations – a classic case for the use of LLMs to increase efficiency and reduce the workload on employees.

When hagebau shareholders, such as the innovation-minded Bauwelt Delmes Heitmann, place orders, the data from their enterprise resource planning (ERP) system must be reconciled with the order confirmation. This is a tedious task that requires a great deal of patience and attention. As anyone who has ever built a house knows, even the smallest deviations in windows and doors are enough to result in a completely unusable product.

Until now, reconciling orders and order confirmations has been very time-consuming for operational colleagues. Despite great care, human error could not be completely avoided. The goal was to relieve the burden on employees and develop an intelligent tool that makes this task much more efficient. Teams can then focus more on customers and use the time gained for personal consultation and support.

With its own AI department and a GPT model for internal applications, hagebau is continuously establishing new tools and is thus one of the pioneers in the industry. However, this very experience has shown that not every use case can be solved with in-house resources. When it came to the automated comparison of documents, it quickly became clear that additional technological support was needed. That is why hagebau, together with Bauwelt Delmes Heitmann and adesso, developed an AI-supported solution that makes the process considerably more efficient.

A team of GenAI and UI engineers from adesso worked closely with hagebau and Bauwelt Delmes Heitmann to design a semi-automated document comparison system that runs natively in Google Cloud. The star of the show: Gemini 2.5 Flash.

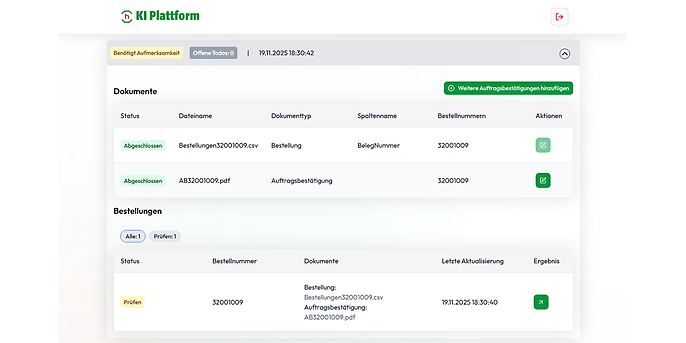

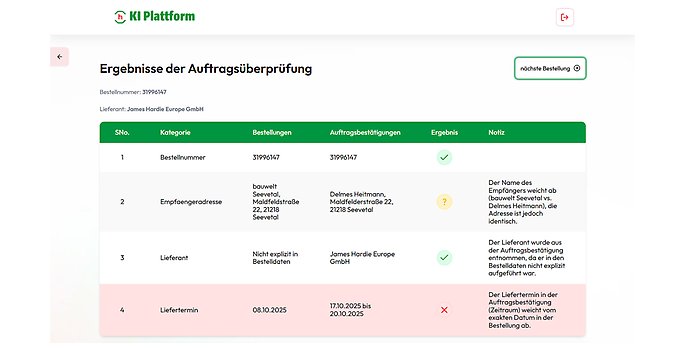

To give shareholders a quick overview of the relevant discrepancies between the documents, the results of the comparison are presented in a table. The table is broken down by fields such as delivery address, item description and unit price. The first column contains the information from the order, the second column the information from the order confirmation.

An icon indicates whether the information matches, differs or is unclear. For example, if both documents contain the same delivery address, but one is addressed to the company's own name and the other to the name entered in the trade register, users must decide for themselves whether this counts as a discrepancy.


Why this task absolutely required an LLM and could not be done using rules

To understand why an LLM-based solution was chosen, it helps to look at exactly how Gemini is used. Two 'blueprints' guide the model: the system prompt and the output schema. The system prompt tells the language model what the task is and how to proceed, step by step: which documents are to be compared, which steps the comparison involves and what result is expected. The output schema enforces a response in JSON format, which the user interface requires for the tabular display, with no detail omitted. The results were already quite good before the schema was introduced, but only with it is the comparison formatted uniformly enough for users to get used to the structure quickly.

As is usual for this type of task, the model's temperature is set to 0. Temperature is the parameter that determines how much randomness, and therefore 'creativity', the model may contribute to its output. Since the aim here is results that are as consistent and comparable as possible, this degree of freedom is minimised.
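To make the idea of an enforced output schema more tangible, here is a minimal sketch in Python. The field names (`field`, `order_value`, `confirmation_value`, `status`, `explanation`) and the three status values are assumptions chosen to mirror the table described above; the article does not publish the real schema. The keys `temperature`, `responseMimeType` and `responseSchema` follow the naming of the Gemini REST API's generation config as we understand it and should be checked against the current documentation.

```python
import json

# Hypothetical status values mirroring the three icons in the results table.
STATUSES = ["MATCH", "DEVIATION", "UNCLEAR"]

# Assumed response schema: one row per compared field.
RESPONSE_SCHEMA = {
    "type": "ARRAY",
    "items": {
        "type": "OBJECT",
        "properties": {
            "field": {"type": "STRING"},
            "order_value": {"type": "STRING"},
            "confirmation_value": {"type": "STRING"},
            "status": {"type": "STRING", "enum": STATUSES},
            "explanation": {"type": "STRING"},
        },
        "required": [
            "field", "order_value", "confirmation_value",
            "status", "explanation",
        ],
    },
}

# Generation settings: JSON output, fixed structure, temperature 0.
GENERATION_CONFIG = {
    "temperature": 0,
    "responseMimeType": "application/json",
    "responseSchema": RESPONSE_SCHEMA,
}


def parse_rows(raw_json: str) -> list[dict]:
    """Parse the model response and re-check it before it reaches the UI."""
    rows = json.loads(raw_json)
    required = RESPONSE_SCHEMA["items"]["required"]
    for row in rows:
        missing = [key for key in required if key not in row]
        if missing:
            raise ValueError(f"row is missing fields: {missing}")
        if row["status"] not in STATUSES:
            raise ValueError(f"unexpected status: {row['status']}")
    return rows
```

Re-validating the parsed response on the back end is a defensive choice in this sketch, not something the article describes; it simply means the front end can rely on every row having the same shape.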

Unlike rule-based approaches, the LLM recognises many deviations as purely semantic right out of the box. It not only recognises that things can be phrased differently and still mean the same thing, but even handles cases where an item in one document was split into several positions in the other.

The user interface of the AI document comparison: Gemini recognises that several detail positions from the CSV file correspond to a single summarised position in the PDF.

The brief and concise explanation of why something is (or is not) a deviation is also only possible in this form with an LLM. Shareholders can also maintain their own glossary of abbreviations and synonyms, which helps the LLM interpret the differences even better. Each glossary is recognised and assigned via the shareholder's tenant ID.
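How a tenant-specific glossary might reach the model can be sketched as follows. Everything here is hypothetical: the in-memory `GLOSSARIES` store, the tenant ID `tenant-001`, the sample entries and both function names are illustrations, since the article only states that glossaries are assigned by tenant ID.

```python
# Hypothetical glossary store keyed by tenant ID (sample data is made up).
GLOSSARIES = {
    "tenant-001": {
        "Stk": "Stück (piece)",
        "AB": "Auftragsbestätigung (order confirmation)",
    },
}


def load_glossary(tenant_id: str) -> dict[str, str]:
    """Return the glossary for a tenant, or an empty one if none exists."""
    return GLOSSARIES.get(tenant_id, {})


def glossary_to_prompt(glossary: dict[str, str]) -> str:
    """Render the glossary as plain text that can be appended to the prompt."""
    if not glossary:
        return ""
    lines = [f"- {term}: {meaning}" for term, meaning in sorted(glossary.items())]
    return "Glossary of abbreviations and synonyms:\n" + "\n".join(lines)
```

Keying the lookup on the tenant ID keeps one shareholder's abbreviations from leaking into another shareholder's comparison.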

Gemini 2.5 Flash: A strategic decision for precision and efficiency

The choice of Gemini 2.5 Flash was no coincidence. Gemini 2.5 Flash performs excellently in optical character recognition (OCR) and can therefore read the content of documents well, even if they have not been optimised for machine processing or are handwritten. This means that the information does not first have to be extracted from the PDF by another model, which could result in context being lost. Furthermore, Gemini 2.5 Flash is a thinking model and can therefore consider a problem in several stages before giving its final answer. This is an important advantage in such complex comparisons, as it not only recognises patterns but also ‘understands’ the documents.

Gemini 2.5 Flash is also very strong at arithmetic. Where other models can still stumble over simple sums, Gemini can independently calculate and compare total prices from unit prices, quantities and discounts. This is extremely helpful, as some documents specify the number of windows and the price per window, while others price the same windows by their total area and a price per square metre.
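The kind of calculation involved can be illustrated with a small worked example. The numbers and the function name `line_total` are invented for illustration; in the solution described here, the model performs this reasoning itself rather than calling code like this.

```python
from decimal import Decimal


def line_total(amount: Decimal, price: Decimal,
               discount_pct: Decimal = Decimal("0")) -> Decimal:
    """Total for one position: amount x price, minus a percentage discount."""
    gross = amount * price
    net = gross * (Decimal("100") - discount_pct) / Decimal("100")
    return net.quantize(Decimal("0.01"))


# Order: 4 windows at 450.00 per window.
order_total = line_total(Decimal("4"), Decimal("450.00"))

# Confirmation: the same windows as 6.00 square metres at 300.00 per square metre.
confirmation_total = line_total(Decimal("6.00"), Decimal("300.00"))

# Both documents describe the same total even though the units differ.
same_total = order_total == confirmation_total
```

`Decimal` is used instead of floating point so that currency amounts compare exactly after rounding to two decimal places.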

Technical implementation in the POC

The user interface is integrated into an existing hagebau platform that runs on-premises. This makes it the only part of the document comparison that is not implemented in a cloud-native manner. A service account authorises the front end to access the back end. This back end is hosted as a container in Google Cloud's fully managed container service, Google Cloud Run.

In our POC, an endpoint receives the two documents to be compared, the name of the shareholder and the order number. Based on this, all necessary information is extracted from the documents and the shareholder's glossary is loaded. The documents, the system prompt and the output schema are then transferred to the Gemini endpoint together with the glossary. The content is returned and used to fill the table, which is then displayed in the user interface.
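The flow described above can be sketched as the assembly of a single request body. This is a simplified illustration, not the project's code: the function name `build_request` and its parameters are hypothetical, and the keys (`systemInstruction`, `contents`, `inlineData`, `generationConfig`) follow the Gemini REST API naming as we understand it. The sketch assumes the order arrives as CSV text and the order confirmation as a PDF, as in the POC.

```python
import base64


def build_request(order_csv: str, confirmation_pdf: bytes, system_prompt: str,
                  output_schema: dict, glossary_text: str = "") -> dict:
    """Bundle documents, system prompt, schema and glossary into one request."""
    parts = [
        {"text": "Order (ERP export, CSV):\n" + order_csv},
        {
            # The PDF is passed as-is so the model can read it directly.
            "inlineData": {
                "mimeType": "application/pdf",
                "data": base64.b64encode(confirmation_pdf).decode("ascii"),
            }
        },
    ]
    if glossary_text:
        parts.append({"text": glossary_text})
    return {
        "systemInstruction": {"parts": [{"text": system_prompt}]},
        "contents": [{"role": "user", "parts": parts}],
        "generationConfig": {
            "temperature": 0,
            "responseMimeType": "application/json",
            "responseSchema": output_schema,
        },
    }
```

Sending the PDF inline rather than as pre-extracted text matches the point made earlier: no separate extraction model sits between the document and Gemini, so no context is lost on the way.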

Roadmap: New features in the MVP and possible future enhancements

The initial feedback from the testers at Bauwelt Delmes Heitmann has been consistently positive – their enthusiasm for the new solution is clearly evident. Document matching is currently in the final optimisation phase, in which the last details are being coordinated. The MVP (minimum viable product) will then be rolled out on a larger scale to make the advantages of the semi-automated solution available across the board. In doing so, adesso is in constant dialogue with its customers. In addition to technical hardening to prepare document matching for productive use, the roadmap primarily includes features that are intended to further simplify usage:

- Document number recognition: In the POC, order numbers always had to be entered manually. To speed up and simplify usage, order numbers will be read from the documents with Gemini in future. Only if no number can be found will users be asked to add it manually. Since the extraction of order numbers in the MVP is less complex and needs to be particularly fast, the even faster and cheaper Gemini 2.5 Flash-Lite is used here.

- Multi-upload: Previously, only the documents for a single order could be uploaded. In the MVP, it is possible to process multiple orders at the same time. To do this, the documents are first uploaded to cloud storage. Firestore then records which orders already have data available, what is missing and what the status of the comparison is.

- Additional file formats: Originally, the document comparison could only process ERP exports in CSV format and order confirmations in PDF format. After consulting with the testers, it became clear that this was not sufficient. Therefore, additional file formats will be processable in the MVP.

- Improvement of the user interface: Thanks to the detailed feedback from the test phase, a number of areas were identified where the user interface could be made more intuitive and user-friendly.
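The bookkeeping needed for multi-upload can be sketched as one record per order. The class `OrderRecord`, its fields and the status names are assumptions made for illustration; the article only says that Firestore tracks which data is available, what is missing and the comparison status. No Firestore calls are shown, only the plain dictionary that such a record could be stored as.

```python
from dataclasses import dataclass, asdict


@dataclass
class OrderRecord:
    """Hypothetical per-order tracking record."""
    order_number: str
    has_order: bool = False          # ERP export uploaded?
    has_confirmation: bool = False   # order confirmation uploaded?
    comparison_done: bool = False

    def missing(self) -> list[str]:
        """List the documents that still need to be uploaded."""
        missing = []
        if not self.has_order:
            missing.append("order")
        if not self.has_confirmation:
            missing.append("order_confirmation")
        return missing

    def status(self) -> str:
        """Derive the comparison status from what is available."""
        if self.comparison_done:
            return "compared"
        if self.missing():
            return "waiting_for_documents"
        return "ready_for_comparison"

    def to_document(self) -> dict:
        """Plain dict that could be written to a document store."""
        return {**asdict(self), "missing": self.missing(), "status": self.status()}
```

Deriving `status` from the stored flags instead of saving it separately avoids records whose status contradicts the documents actually present.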

Beyond the currently agreed MVP features, there are already ideas for possible future enhancements:

- Automated overnight data import: Fully automating data import by exporting order data to Google Cloud overnight would significantly reduce the effort required by users and increase the efficiency of document comparison.

- Individual thresholds: Users can already maintain their glossary in the proof of concept. In future, they will also be able to set individual thresholds, for example the point at which a price deviation counts as a discrepancy. This will increase the hit rate and reduce the time required by users.

- Access to past comparisons and documents: Another conceivable future enhancement is the ability to access a history of comparisons and the original documents.
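The idea of individual thresholds mentioned in the list above can be illustrated with a simple percentage tolerance. This is only one possible reading: the function name `classify_price`, the percentage-based rule and the status labels are assumptions, and the article does not say whether such a check would run in code or be passed to the model as part of the prompt.

```python
from decimal import Decimal


def classify_price(order_price: Decimal, confirmation_price: Decimal,
                   tolerance_pct: Decimal) -> str:
    """Treat price differences within the tenant's tolerance as a match."""
    if order_price == confirmation_price:
        return "MATCH"
    if order_price == 0:
        # No meaningful percentage can be computed against a zero price.
        return "DEVIATION"
    deviation_pct = abs(confirmation_price - order_price) / order_price * 100
    return "MATCH" if deviation_pct <= tolerance_pct else "DEVIATION"
```

With a tolerance of 1 percent, a confirmation price of 100.50 against an order price of 100.00 would pass, while a shareholder who sets the tolerance to 0.1 percent would see the same pair flagged.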

This semi-automated document comparison solution shows that LLMs such as Gemini are capable of more than just chatbots and RAG (retrieval-augmented generation). Thanks to Gemini's strengths in OCR, analytical reasoning and calculation, adesso was able to develop a cloud-native solution that saves the customer a tremendous amount of work and simplifies knowledge transfer.

We support you!

Would you also like to automate your business processes with AI or use LLMs such as Gemini efficiently in your company? Then talk to our experts – adesso will accompany you from the initial idea to productive use.