5 January 2026, by Johannes Neubauer

Code Execution or Domain-Specific Language (DSL)?

Enterprise-ready Agentic AI requires a network and a safety net

For over 25 years, we at adesso have been developing enterprise applications, or more precisely, customized (information) systems that are closely aligned with our customers' value chains and core business processes.

We have not only experienced the shift from monoliths to modular systems and microservices, from application servers to containerization, from on-premises to cloud-native and serverless, from server-side rendering to single-page applications, and from native to hybrid apps, but have also actively shaped it. Separating promising technological developments from hype and determining the right time for each company to modernize is at the core of our consulting expertise.

One thing is always clear: hype comes and goes. Robust software architecture principles, however, endure and evolve gradually rather than through revolutions.

Market situation in the DACH region:

According to the latest Horváth study (July 2025), companies in Germany, Austria, and Switzerland have now moved beyond the hype and experimentation phase surrounding artificial intelligence (AI). Over 600 board members and executives from companies with annual revenues of more than €100 million were surveyed.

Instead of non-binding pilot projects and experiments, the focus is now on concrete, ambitious efficiency targets. Investments in AI are being used in a targeted manner to achieve measurable productivity gains and cost savings. Companies now expect AI solutions to make a clear, sustainable contribution to value creation and no longer treat them as a playground for innovation projects. To achieve these goals, companies are prepared to invest 21 percent more in 2026 than in 2025 and expect efficiency gains of 16 percent across all areas.

The next wave is currently sweeping across the industry: Agentic AI, i.e., AI-supported agents that orchestrate tools autonomously. A variety of standards such as the Model Context Protocol (MCP) and the Agent2Agent (A2A) protocol have been defined. Frameworks such as LangChain, LangGraph, and Microsoft AutoGen have emerged in the Python ecosystem, and LangChain4j, Spring AI, and LangGraph4j in the Java ecosystem. In the low-code area, there are agentic workflow platforms such as n8n and br.ai.n. But as is so often the case, architectural sins are being committed.

An essential building block of this new world is Anthropic's MCP standard. Developers register their tools (APIs, databases, services) via a schema, and the Large Language Model (LLM) can call these tools “independently.” Technically, MCP is based entirely on classic technologies such as JSON-RPC 2.0 and Server-Sent Events (SSE). The mechanism is very powerful, but it fills the context with a potentially large number of call definitions, tool descriptions, and intermediate results. This can lead to performance losses, high costs, declining response quality, and hallucinations. It also makes it more difficult to control which information is made available to the model, which matters for compliance and data protection requirements.
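To make the wire format concrete, here is a minimal sketch of a single MCP tool invocation as a JSON-RPC 2.0 request. The `tools/call` method with its `name` and `arguments` parameters follows the MCP specification; the helper `buildToolCall`, the tool name, and the argument are illustrative assumptions borrowed from the example used later in this article.

```typescript
// Shape of a JSON-RPC 2.0 request for MCP's "tools/call" method.
interface ToolCallRequest {
  jsonrpc: "2.0";
  id: number;
  method: "tools/call";
  params: { name: string; arguments: Record<string, unknown> };
}

// Hypothetical helper that builds the request object.
function buildToolCall(
  id: number,
  name: string,
  args: Record<string, unknown>,
): ToolCallRequest {
  return { jsonrpc: "2.0", id, method: "tools/call", params: { name, arguments: args } };
}

// Tool name and argument are illustrative only.
const request = buildToolCall(1, "gdrive.getDocument", { documentId: "abc123" });
console.log(JSON.stringify(request, null, 2));
```

In classic MCP usage, the tool's schema, every such request, and every raw result pass through the model context, which is where the costs described above come from.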

In its blog article “Code Execution with MCP: Building more efficient agents,” Anthropic suggested that instead of executing each tool call as a separate step and transferring all intermediate results to the model context, LLMs should be allowed to generate code. This code combines multiple calls, filters raw data, and returns only the final result to the model context, which can save a significant number of tokens. Anthropic puts the savings in its example at 98 percent.

Cloudflare reports in “Code Mode: the better way to use MCP” that this concept brings significant efficiency gains and that MCP has been used “incorrectly” up to now. The authors observed that LLMs are significantly better at writing code that calls MCP tools than at calling the tools directly, where every intermediate step must pass through the model context and can add up to hundreds of thousands of tokens.

Simon Willison (board member of the Python Software Foundation) praised the approach as “a very sensible way to address the major drawbacks of classic MCP toolchains.” At the same time, however, many developers are raising security concerns: code execution offers a large attack surface, requires complex sandboxing, and is difficult to test statically. Posts on Hacker News and Reddit criticized MCP for making everything more complicated – and suggested using CLI tools or addressing REST APIs directly instead.

We would never allow a human developer to deploy code into production without testing it first...

So we don't allow AI to do that either!

We propose a new solution that offers the same efficiency gains without allowing an LLM to execute mobile code directly in a production environment. We would never allow a human developer to do that either. Our approach relies on expression languages and a workflow DSL (domain-specific language).

We see this as part of our solution architecture for integrating GenAI components into custom developments. It elevates agentic systems to the enterprise level and is based on ten principles. Before we get into that, let's compare Anthropic's Code Mode proposal with our DSL Mode approach using the example from Anthropic's blog post.

Example: “Sales meeting notes to Salesforce” – Code Mode vs. Workflow DSL

Anthropic's proposal (and the discussion surrounding it) targets a very specific bottleneck: intermediate results should not make a round trip through the model context. Instead, multiple tool calls are combined “outside,” raw data is filtered there, and only a compact end result is returned to the model.

This efficiency idea can be implemented in two ways: generating and executing code – or creating and interpreting a workflow as a data structure.

Option A: Code execution (“code mode”)

The LLM generates code (e.g., TypeScript) that calls tools, merges data, and reduces the result:

    // generated by the LLM, executed in a sandbox
    const doc = await gdrive.getDocument({ documentId: "abc123" });
    await salesforce.updateRecord({
      objectType: "SalesMeeting",
      recordId: "00Q5f000001abcXYZ",
      data: { Notes: doc.content }
    });
    // only the minimal result returns to the model
    return { ok: true, updatedId: "00Q5f000001abcXYZ" };

The greatest strength of this approach is its expressiveness: almost any logic can be implemented with it. That is also its weakness. Even with sandboxing, the fundamental problems of code generation remain: imports, external libraries, undesirable side effects, and unexpected control flow can all occur if the sandbox is not perfect. Static guarantees are correspondingly hard to establish, because the system must correctly restrict and secure every possible combination of language constructs.

Option B: Workflow DSL (“DSL Mode”)

Here, the LLM does not create executable code, but rather a workflow instance in a DSL. An interpreter executes the small set of DSL operations (tool call, reference, projection, return). An expression language such as jq reduces the return values, so here too intermediate results never make a round trip through the model context but are condensed on the server side:

    dsl: enterprise-workflow
    version: "1.0"
    workflow: sync_sales_meeting_notes
    steps:
      - call: gdrive.getDocument
        in: {documentId: abc123}
        as: doc
        out: {jq: '{transcript: .content}'}
      - call: salesforce.updateRecord
        in:
          objectType: SalesMeeting
          recordId: 00Q5f000001abcXYZ
          data: {Notes: {$ref: doc.transcript}}
        as: sf
        out: {jq: '{ok: .success, updatedId: .id}'}
    return: {from: sf, jq: .}

In this example, we use YAML (internal DSL) as the target language. JSON or a custom language (external DSL) based on the syntax of TypeScript or Python would also be possible. The choice depends primarily on how reliably the LLM produces correct output in the respective syntax. What matters is that validation takes place before execution and that the interpreter can execute only the operations permitted by the DSL.
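How small such an interpreter can be is illustrated by the following sketch. It is not our production implementation: the types `Step` and `Workflow`, the function `run`, and the stub tools are hypothetical, the tools are synchronous for brevity, and a simple field mapping (`pick`) stands in for a jq projection such as `{transcript: .content}`.

```typescript
// Hypothetical, simplified workflow model. `pick` stands in for a jq
// projection (output key -> source field); real tools would be asynchronous.
type Json = Record<string, unknown>;
interface Ref { $ref: string } // e.g. { $ref: "doc.transcript" }
interface Step { call: string; in: Json; as: string; pick: Record<string, string> }
interface Workflow { steps: Step[]; returnFrom: string }
type Tool = (input: Json) => Json;

function isRef(value: unknown): value is Ref {
  return typeof value === "object" && value !== null && typeof (value as Ref).$ref === "string";
}

// Replaces references to earlier step results; everything else is a literal.
function resolve(value: unknown, scope: Map<string, Json>): unknown {
  if (isRef(value)) {
    const [stepName, field] = value.$ref.split(".");
    const source = scope.get(stepName);
    if (source === undefined) throw new Error(`unknown reference: ${value.$ref}`);
    return source[field];
  }
  if (typeof value === "object" && value !== null && !Array.isArray(value)) {
    const out: Json = {};
    for (const [key, inner] of Object.entries(value)) out[key] = resolve(inner, scope);
    return out;
  }
  return value;
}

// The interpreter knows exactly four operations: call, reference, projection, return.
function run(workflow: Workflow, tools: Map<string, Tool>): Json {
  const scope = new Map<string, Json>();
  for (const step of workflow.steps) {
    const tool = tools.get(step.call);
    if (tool === undefined) throw new Error(`tool not permitted: ${step.call}`);
    const raw = tool(resolve(step.in, scope) as Json);
    // Keep only the projected fields; the raw result never leaves the interpreter.
    const reduced: Json = {};
    for (const [target, source] of Object.entries(step.pick)) reduced[target] = raw[source];
    scope.set(step.as, reduced);
  }
  const result = scope.get(workflow.returnFrom);
  if (result === undefined) throw new Error(`unknown return source: ${workflow.returnFrom}`);
  return result;
}

// Stub tools for illustration only.
const tools = new Map<string, Tool>([
  ["gdrive.getDocument", () => ({ content: "Meeting notes ...", owner: "jane@example.com" })],
  ["salesforce.updateRecord", (input) => ({ success: true, id: input["recordId"], audit: "internal" })],
]);

const result = run(
  {
    steps: [
      { call: "gdrive.getDocument", in: { documentId: "abc123" }, as: "doc", pick: { transcript: "content" } },
      {
        call: "salesforce.updateRecord",
        in: { objectType: "SalesMeeting", recordId: "00Q5f000001abcXYZ", data: { Notes: { $ref: "doc.transcript" } } },
        as: "sf",
        pick: { ok: "success", updatedId: "id" },
      },
    ],
    returnFrom: "sf",
  },
  tools,
);
console.log(result); // { ok: true, updatedId: "00Q5f000001abcXYZ" }
```

Fields that are not projected (here `owner` and `audit`) never reach the model, and a step that names an unknown tool fails before anything is called.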

LLM tools must be treated at the user level at runtime. The same rules for access control and input validation apply as for any other user request.

We must bear in mind that in this setup the LLM effectively generates business logic that runs directly in the production environment, something we would never otherwise allow in enterprise applications. Therefore, the tools made available to the LLM must operate at the user level at runtime, with the same access control mechanisms and input validation as for any other user request. In particular, this means no native admin functions (i.e., no god mode) and no direct access to databases. It also means confirmation prompts for calls with a greater impact (e.g., deleting one or more business objects).
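A minimal sketch of such a user-level gate could look as follows. The types `UserContext`, `ToolPolicy`, and `Decision`, the function `authorize`, and the tool and permission names are hypothetical and only serve to illustrate the rule set: unknown tools are denied, permissions are checked against the calling user, and high-impact calls require explicit confirmation.

```typescript
// Hypothetical types; none of these names come from a real library.
interface UserContext { userId: string; permissions: Set<string> }
interface ToolPolicy { requiredPermission: string; highImpact: boolean }
type Decision =
  | { kind: "allow" }
  | { kind: "deny"; reason: string }
  | { kind: "confirm"; prompt: string };

// Only tools listed here are reachable at all; there is no admin or raw database tool.
const policies = new Map<string, ToolPolicy>([
  ["crm.readContact", { requiredPermission: "contact:read", highImpact: false }],
  ["crm.deleteContact", { requiredPermission: "contact:delete", highImpact: true }],
]);

// The LLM's call is judged with the permissions of the user it acts for.
function authorize(user: UserContext, tool: string, confirmedByUser: boolean): Decision {
  const policy = policies.get(tool);
  if (policy === undefined) return { kind: "deny", reason: `unknown tool: ${tool}` };
  if (!user.permissions.has(policy.requiredPermission)) {
    return { kind: "deny", reason: `missing permission: ${policy.requiredPermission}` };
  }
  if (policy.highImpact && !confirmedByUser) {
    return { kind: "confirm", prompt: `Please confirm the call to ${tool}.` };
  }
  return { kind: "allow" };
}

const clerk: UserContext = {
  userId: "u-42",
  permissions: new Set(["contact:read", "contact:delete"]),
};
console.log(authorize(clerk, "crm.readContact", false).kind);   // allow
console.log(authorize(clerk, "crm.deleteContact", false).kind); // confirm
console.log(authorize(clerk, "db.rawQuery", false).kind);       // deny
```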

The key difference between the approaches is not so much whether YAML is used instead of TypeScript or Python, but rather the type of controllability. Each individual step is clearly structured and can be validated specifically. Before execution, the interpreter checks whether the steps are actually tool calls, whether the corresponding tools are permitted, whether the parameters passed are correct, whether data minimization is performed by jq, and whether size and timeout limits are adhered to. Import statements or arbitrary function calls, as in classic programming languages, are not possible in the workflow DSL. If a problem occurs during validation, it can be reported back to the LLM and corrected via a bounded retry mechanism. This can significantly increase the quality of the results.
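The following sketch shows how these checks and the bounded retry could fit together. It assumes a reduced step shape; `validate`, `planWithRetry`, the limits, and the `Planner` type (a stand-in for the actual LLM call) are hypothetical names chosen for illustration.

```typescript
// Hypothetical step shape and limits; the names are illustrative, not a real API.
interface Step { call: string; in: Record<string, unknown>; out?: { jq: string }; timeoutMs?: number }
interface ToolSpec { requiredParams: string[] }

const allowedTools = new Map<string, ToolSpec>([
  ["gdrive.getDocument", { requiredParams: ["documentId"] }],
  ["salesforce.updateRecord", { requiredParams: ["objectType", "recordId", "data"] }],
]);
const MAX_STEPS = 10;
const MAX_TIMEOUT_MS = 30000;

// Pure, deterministic validation: returns a list of problems, empty if the plan is acceptable.
function validate(steps: Step[]): string[] {
  const errors: string[] = [];
  if (steps.length > MAX_STEPS) errors.push(`too many steps: ${steps.length} > ${MAX_STEPS}`);
  steps.forEach((step, i) => {
    const spec = allowedTools.get(step.call);
    if (spec === undefined) {
      errors.push(`step ${i}: tool not permitted: ${step.call}`);
      return;
    }
    for (const param of spec.requiredParams) {
      if (!Object.prototype.hasOwnProperty.call(step.in, param)) {
        errors.push(`step ${i}: missing parameter: ${param}`);
      }
    }
    if (step.out === undefined) errors.push(`step ${i}: no jq projection (data minimization)`);
    if ((step.timeoutMs ?? 0) > MAX_TIMEOUT_MS) errors.push(`step ${i}: timeout exceeds limit`);
  });
  return errors;
}

// Bounded retry: the planner receives the previous validation errors as
// feedback and gets at most `maxAttempts` tries.
type Planner = (feedback: string[]) => Step[];

function planWithRetry(planner: Planner, maxAttempts: number): Step[] {
  let feedback: string[] = [];
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const plan = planner(feedback);
    feedback = validate(plan);
    if (feedback.length === 0) return plan;
  }
  throw new Error(`no valid plan after ${maxAttempts} attempts: ${feedback.join("; ")}`);
}

// Stub planner: the first attempt uses a forbidden tool, the second is valid.
let calls = 0;
const stubPlanner: Planner = () => {
  calls++;
  return calls === 1
    ? [{ call: "shell.exec", in: { cmd: "ls" } }]
    : [{ call: "gdrive.getDocument", in: { documentId: "abc123" }, out: { jq: "{transcript: .content}" } }];
};
const plan = planWithRetry(stubPlanner, 3);
console.log(plan.length, calls); // 1 2
```

Because validation is a pure function over a data structure, the same checks can run in unit tests, in CI, and at runtime.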

The execution space thus remains deliberately small and manageable. In code mode, by contrast, the freedom of a general-purpose language has to be restricted after the fact at the technical level, which can be nearly impossible depending on the runtime, libraries, and language used. DSL Mode creates a “safety net” effect: the LLM creates a plan, and the runtime controls its execution deterministically.

Tool Discovery: Less context, better selection – for both approaches

Both variants benefit when the tool selection is not made via complete schema descriptions in the model context, but via Tool Discovery:

- Directory approach (directory service): Tools are stored in a registry with a short profile (name, purpose, important fields, policies). The model only receives relevant entries “on demand.”

- RAG / semantic search: The model formulates intent and constraints. A vector search delivers suitable tools according to a defined strategy (e.g., top-k). Only then is the concrete plan generated as a DSL instance.

This reduces context load and costs, and the hallucination rate typically falls without losing dynamic tool orchestration via MCP.
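The discovery step can be sketched as follows. The registry entries, `score`, and `discover` are hypothetical, and a simple word-overlap score stands in for the vector search; a real system would compare embeddings instead.

```typescript
// Hypothetical registry entry: a short profile instead of the full schema.
interface ToolProfile { name: string; purpose: string }

const registry: ToolProfile[] = [
  { name: "gdrive.getDocument", purpose: "read a document from google drive" },
  { name: "salesforce.updateRecord", purpose: "update a record in salesforce crm" },
  { name: "calendar.createEvent", purpose: "create a calendar event for a meeting" },
];

const tokenize = (text: string): string[] =>
  text.toLowerCase().split(/\W+/).filter((word) => word.length > 0);

// Stand-in for semantic search: counts the distinct intent words that occur
// in the tool profile.
function score(intent: string, tool: ToolProfile): number {
  const profileWords = new Set(tokenize(`${tool.name} ${tool.purpose}`));
  return Array.from(new Set(tokenize(intent))).filter((word) => profileWords.has(word)).length;
}

// Returns at most k matching profiles; only these are placed in the model context.
function discover(intent: string, k: number): ToolProfile[] {
  return registry
    .map((tool) => ({ tool, relevance: score(intent, tool) }))
    .filter((entry) => entry.relevance > 0)
    .sort((a, b) => b.relevance - a.relevance)
    .slice(0, k)
    .map((entry) => entry.tool);
}

const hits = discover("update the salesforce record with the document from drive", 2);
console.log(hits.map((tool) => tool.name));
```

For this intent, the calendar tool never enters the context; its schema would only be loaded if a later request actually needed it.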

Important

“Tool Discovery” here refers to the selection of specific tools (and, if necessary, reloading their schemas), not mechanisms such as the Docker MCP Catalog for finding MCP servers as container images or “Meta-MCP” as a pure gateway for aggregating multiple MCP servers. However, these building blocks can be combined so that a model can search for tools in a targeted manner without having to load all MCP schemas into the context in advance.

Interim conclusion

Anthropic's Code Mode proposal focuses on the LLM generating TypeScript or Python to combine MCP tools, filter data, and only then load a result into the context window. This saves tokens and avoids the need for large amounts of data to be processed by the model.

At the same time, this increases the demands on the sandbox: the code has access to libraries and must be securely isolated and restricted. An LLM-generated loop can unintentionally trigger a denial of service (DoS), generated code can exfiltrate data, or it can simply abort due to syntax errors. Our proposed shift from “code is freedom” to “DSL is controlled expressiveness” in DSL Mode enables auditability, data minimization, and reproducibility. It makes Agentic AI efficient and enterprise-ready.

DSL Mode creates a clear security and compliance argument: efficiency by eliminating the round trip of intermediate results and control through a specifically configurable expressiveness that is validated before execution.

Expressiveness can be specifically configured with a workflow DSL:

- DSL as a lever: We can deliberately extend the DSL with additional language elements (e.g., if/else, switch, foreach, parallel, retry, compensation) or keep it lean. Expressiveness thus becomes an architectural decision – not a by-product of “we execute code.”

- Expression language as a separate lever: jq can be restricted, or alternatives such as JMESPath can be used. Formally, jq is as expressive as a general-purpose programming language, which opens up many possibilities but also many risks. That is exactly why it makes sense not to simply “unlock” jq completely, but to define permitted subsets (e.g., only pure projections and filters, no unbounded constructs, size limits).

- Static verification before execution: Because the workflow is a data structure, these rules can be validated in advance and deterministically: permitted step types, permitted tools, maximum number of steps, reference graph without cycles, jq subset/rules, timeouts, output sizes, syntactic correctness, etc.
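Two of these static checks are sketched below under simplifying assumptions: a cycle check on the reference graph and an allowlist for a very small jq subset (identity, field paths, and flat object projections). The step model, `hasCycle`, and the `ALLOWED_JQ` pattern are hypothetical; a production validator would parse jq properly instead of relying on a regular expression.

```typescript
// Hypothetical, reduced step model: `as` names the result, `refs` lists the
// step results this step depends on, `jq` is the projection expression.
interface Step { as: string; refs: string[]; jq: string }

// Depth-first search over the reference graph; a back edge means a cycle.
function hasCycle(steps: Step[]): boolean {
  const deps = new Map<string, string[]>();
  for (const step of steps) deps.set(step.as, step.refs);
  const state = new Map<string, "visiting" | "done">();

  const visit = (name: string): boolean => {
    if (state.get(name) === "done") return false;
    if (state.get(name) === "visiting") return true;
    state.set(name, "visiting");
    for (const dep of deps.get(name) ?? []) {
      if (visit(dep)) return true;
    }
    state.set(name, "done");
    return false;
  };
  return steps.some((step) => visit(step.as));
}

// Permitted jq subset: "." or ".a.b" or "{key: .path, ...}". Pipes, function
// calls, recursion, and definitions do not match and are therefore rejected.
const PATH = String.raw`\.(?:[A-Za-z_]\w*(?:\.[A-Za-z_]\w*)*)?`;
const KEY = String.raw`[A-Za-z_]\w*`;
const FIELD = String.raw`\s*${KEY}\s*:\s*${PATH}\s*`;
const ALLOWED_JQ = new RegExp(`^(?:${PATH}|\\{${FIELD}(?:,${FIELD})*\\})$`);

const steps: Step[] = [
  { as: "doc", refs: [], jq: "{transcript: .content}" },
  { as: "sf", refs: ["doc"], jq: "{ok: .success, updatedId: .id}" },
];
console.log(hasCycle(steps));                                 // false
console.log(steps.every((step) => ALLOWED_JQ.test(step.jq))); // true
console.log(ALLOWED_JQ.test("[limit(5; repeat(.))]"));        // false
```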

The lever for increasing efficiency is shared by the Code Mode and DSL Mode approaches:

- Tool discovery instead of “MCP server definition in context”

- Execution of multiple tools and logic in one step, without loading intermediate results into the context.

- Preparation and filtering of tool outputs before returning them to the model.

This makes AI agents more secure, more powerful, more reliable, and more affordable. But we're not stopping there.

MCP is not a panacea

Although the Model Context Protocol is a powerful mechanism, we believe that it is not the right solution for every problem. In some scenarios, it makes sense to generate GraphQL queries directly and have them executed by deterministic business logic. A GraphQL endpoint delivers or modifies exactly the requested data in the desired structure and can be encapsulated similarly to an MCP tool. Access control can also be implemented. The key point is that we do not return the raw data to the LLM, or only return it in pseudonymized form. Although the LLM generates DSL steps and jq expressions or even GraphQL queries, it does not execute them itself. Instead, the orchestrator interprets these definitions deterministically, fetches the data from the backends, and processes it further. This ensures that no unwanted information flows to the LLM and that the sandbox problem is significantly reduced. The flexibility to use GraphQL directly does not mean abandoning our principles, but rather complements them when an MCP schema is insufficient or too rigid. GraphQL is also suitable for legacy applications, as a GraphQL server can be placed in front of any service as an access layer.
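The pseudonymization step mentioned above can be sketched as follows. The function `createPseudonymizer`, the field names, and the placeholder format are hypothetical; the sketch only shows the principle that the orchestrator masks sensitive values before anything reaches the model and restores them afterwards for the authorized user.

```typescript
// Hypothetical row type for a flat backend result (e.g., from a GraphQL query).
type Row = Record<string, string | number>;

function createPseudonymizer(sensitiveFields: string[]) {
  const forward = new Map<string, string>();  // real value -> placeholder
  const backward = new Map<string, string>(); // placeholder -> real value

  // Returns a stable placeholder, so the same value is always masked identically.
  function mask(field: string, value: string): string {
    const known = forward.get(value);
    if (known !== undefined) return known;
    const placeholder = `<${field.toUpperCase()}_${forward.size + 1}>`;
    forward.set(value, placeholder);
    backward.set(placeholder, value);
    return placeholder;
  }

  return {
    // Applied to backend results before anything is returned to the model.
    pseudonymize(rows: Row[]): Row[] {
      return rows.map((row) => {
        const out: Row = {};
        for (const [field, value] of Object.entries(row)) {
          out[field] = sensitiveFields.includes(field) ? mask(field, String(value)) : value;
        }
        return out;
      });
    },
    // Applied to the model's answer before it is shown to the authorized user.
    restore(text: string): string {
      let result = text;
      backward.forEach((value, placeholder) => {
        result = result.split(placeholder).join(value);
      });
      return result;
    },
  };
}

const pseudonymizer = createPseudonymizer(["name", "email"]);
const safeRows = pseudonymizer.pseudonymize([
  { name: "Erika Mustermann", email: "erika@example.com", openInvoices: 3 },
]);
console.log(safeRows); // [{ name: "<NAME_1>", email: "<EMAIL_2>", openInvoices: 3 }]
console.log(pseudonymizer.restore("<NAME_1> has 3 open invoices."));
// Erika Mustermann has 3 open invoices.
```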

Ten enterprise principles for agentic systems

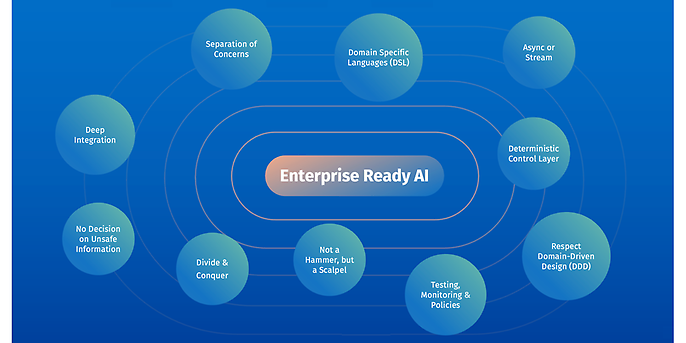

Beyond the choice between DSL mode and code mode, other principles are crucial for the meaningful integration of GenAI components into enterprise applications. We present these principles below.

10 Principles for Enterprise-Ready AI

We have formulated ten basic architectural principles for integrating AI agents into business-critical applications. These principles are the result of our experience and serve as guidelines for architecture and operation:

1. Not a hammer, but a scalpel: AI is only used where it delivers clear added value. Standard functions remain implemented conventionally. For concurrency, data queries, transactions, access control, mathematical operations, error handling, messaging, templating, etc., we consistently use classic, deterministic mechanisms.

2. Divide and conquer: Tasks are broken down into small, clearly defined sub-steps with clear responsibilities. Each sub-step is given its own context and a suitable prompt and is routed to specialized AI services or agents. Independent sub-steps are executed concurrently, with limited parallelism. Results are validated and, if necessary, re-executed with backoff.

3. Separation of concerns: GenAI components are implemented as separate services in microservice architectures or as modules in module libraries. They use metadata such as GraphQL schemas or OpenAPI definitions to generate queries. Retrieval and processing are performed in deterministic application logic as far as possible, and only the absolutely necessary data is sent to the LLM, in anonymized or pseudonymized form.

4. Domain-specific languages (DSL): Instead of executing AI-generated code, we rely on metamodels and configurative DSLs. They are statically validatable, auditable, and interpreted by deterministic code. For data transformations, we use expression languages such as jq. This principle applies not only to MCP and tool calls, but also to the creation of automations, dynamic user interfaces, and other use cases. In particular, we do not generate or execute mobile code because it poses an unnecessary security risk. Instead, the LLM generates instances of the metamodels, which we strictly validate and then interpret in the traditional way. This makes the solutions less generic, but increases control, traceability, and security.

5. Deterministic control layer: A deterministic workflow controls when which AI module or agent becomes active. We prefer clearly defined agentic workflows to generic feedback loops. Classic, deterministic business logic decides on execution, validation, retries, and next steps. This keeps control with the application rather than with the model.

6. Deep integration: AI is specifically integrated deep into the application architecture. We only use low-code or scripting where it delivers added value. The more closely AI and business logic are intertwined, the greater the transfer effort between scripting and application. Low-code is fast at the beginning, but it does not scale well and becomes fragile, insecure, and difficult to maintain over time. That is why an orchestrator controls all calls and keeps the interaction controlled and traceable.

7. Testing and policies: Tests, metrics, filters, and safeguards are an integral part of every AI integration. Since AI is not deterministic, we test and stress-test the components and monitor their behavior via policies during operation. Inputs and outputs are filtered and secured at runtime. Where errors are unacceptable, AI is not used.

8. Async or stream: Longer processes are executed asynchronously or provided as a stream. In the case of direct interaction between the user and the LLM, we provide a tightly timed status and results stream for synchronous calls to keep the dialogue flowing smoothly. Execution continues and is not interrupted just because the user leaves the app, page, or focus.

9. Avoid decisions based on uncertain information: The system asks for clarification when information is missing or provides partial results with clear indications of uncertainty. It should not make confident decisions based on invented assumptions.

10. Respect domain-driven design (DDD): DDD defines the domain-level communication and responsibility boundaries. These boundaries do not have to be identical to the microservice cuts. In multi-agent systems, however, DDD and bounded contexts are consistently used to determine when agents run within a service and when they run as separate services that communicate via A2A.
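As an illustration of the second principle, the following sketch combines limited parallelism with validation and retry with backoff. The helper names `backoffDelayMs`, `withRetry`, and `mapWithLimit` and the default values are assumptions made for this example, and the worker is a stub standing in for a call to a specialized AI service.

```typescript
// Exponential backoff with an upper bound; `attempt` starts at 0.
function backoffDelayMs(attempt: number, baseMs = 200, capMs = 5000): number {
  return Math.min(capMs, baseMs * 2 ** attempt);
}

const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

// Runs `task` and re-executes it with backoff while the result fails validation.
async function withRetry<T>(
  task: () => Promise<T>,
  isValid: (result: T) => boolean,
  maxAttempts = 3,
): Promise<T> {
  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    const result = await task();
    if (isValid(result)) return result;
    if (attempt < maxAttempts - 1) await sleep(backoffDelayMs(attempt));
  }
  throw new Error(`no valid result after ${maxAttempts} attempts`);
}

// Executes independent sub-steps concurrently, but never more than `limit` at once.
async function mapWithLimit<I, O>(
  items: I[],
  limit: number,
  worker: (item: I) => Promise<O>,
): Promise<O[]> {
  const results = new Array<O>(items.length);
  let next = 0;
  // Each runner pulls the next free index until all items are processed.
  const runners = Array.from({ length: Math.min(limit, items.length) }, async () => {
    while (next < items.length) {
      const index = next++;
      results[index] = await worker(items[index]);
    }
  });
  await Promise.all(runners);
  return results;
}

// Usage with a stub worker; logs the upper-cased strings in input order.
mapWithLimit(["a", "b", "c"], 2, (item) =>
  withRetry(async () => item.toUpperCase(), (result) => result.length > 0),
).then((out) => console.log(out));
```

The deterministic control layer (principle 5) owns the limit, the validation function, and the retry budget; the AI service only supplies the result of each sub-step.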

These principles provide clarity when we integrate new GenAI technologies such as LLMs. They help to avoid the dangers we know from previous technology cycles: untested orchestration, data overload, lack of expertise, and lack of governance.

Conclusion and outlook

The changes that AI, and GenAI in particular, will bring affect all areas and will certainly not stop at information systems. We see great opportunities and new possibilities in this, but also risks. That is why we need a solid foundation on which to build solutions for our customers' core business. Opportunities should be exploited while technologies are used with common sense. Different standards apply to use cases with high reliability and security requirements than to a simple chatbot. Which variant and technology is chosen depends on the specific business case, a core principle of adesso.

We are already applying these approaches for customers from a variety of industries, from media and entertainment to retail and manufacturing. We have carried our commitment to software excellence into the AI age and are ready to actively shape the changes that lie ahead.
