1 July 2025, by Jan Skrovanek

Why switching to sovereign GenAI is easier than you think

What is behind the term ‘sovereign AI’?

Artificial intelligence (AI) is fundamentally changing our business world. But while companies want to tap into the potential of AI, there is also a growing awareness of the risks of external dependencies.

Many companies lack in-house expertise in key technologies such as AI. This makes them dependent on large technology companies, which can pose a strategic risk. At the same time, they need powerful technology to keep pace with change. Modern large language models (LLMs) are so complex and costly to train and operate that a completely autonomous AI infrastructure is out of the question for most companies.

The term ‘sovereign AI’ describes the balance between these two extremes. It is about the ability of companies to use AI technologies in a self-determined, secure and controlled manner – without handing over critical business data or decision-making processes to third parties. The desire for alternatives is also evident in our GenAI Impact Report Germany 2025, where 71 percent of respondents say that it is important or very important to them that GenAI applications for their company are developed in Europe.

What many people misunderstand is that a shift towards sovereign AI does not mean a radical break, but rather a gradual transition to a new operating model. This is important wherever companies want or need to maintain their digital independence. However, sovereignty does not mean turning away from the major providers completely, as this is unrealistic. Rather, it is about the freedom to decide for yourself what your GenAI stack looks like and to remain flexible enough to be able to respond to geopolitical risks.

How best to get started?

The first step towards sovereign AI is a brief inventory of the components in your GenAI stack.

The switch to ‘sovereign solutions’ is often associated with a loss of performance. But is that justified? Or can sovereign AI be designed in such a way that it can match the performance of the leading providers?

For such an analysis, we first need to define which components make up a ‘GenAI solution’. They can be roughly divided into three areas:

1. Hardware & infrastructure

2. Language models

3. Software
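A minimal sketch of such an inventory, assuming a simple yes/no flag for whether each component is sovereign (self-hosted, EU-hosted or open source). The component names and the classification rule are hypothetical; the point is only that tagging each component by layer makes the dependency profile of the stack visible at a glance.

```python
from dataclasses import dataclass


@dataclass
class Component:
    """Hypothetical inventory entry for one part of the GenAI stack."""
    name: str
    layer: str        # "hardware", "models" or "software"
    sovereign: bool   # assumed criterion: self-hosted, EU-hosted or open source


def sovereignty_by_layer(inventory: list[Component]) -> dict[str, float]:
    """Return the share of sovereign components per layer (0.0 to 1.0)."""
    totals: dict[str, int] = {}
    sovereign: dict[str, int] = {}
    for c in inventory:
        totals[c.layer] = totals.get(c.layer, 0) + 1
        if c.sovereign:
            sovereign[c.layer] = sovereign.get(c.layer, 0) + 1
    return {layer: sovereign.get(layer, 0) / n for layer, n in totals.items()}


# Invented example inventory
inventory = [
    Component("Hyperscaler GPU cluster", "hardware", False),
    Component("On-premise inference server", "hardware", True),
    Component("Proprietary LLM via API", "models", False),
    Component("Open-weight LLM, self-hosted", "models", True),
    Component("Open-source orchestration framework", "software", True),
]

print(sovereignty_by_layer(inventory))
```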

In this blog post, I take a brief look at these three levels and answer the question: ‘Can sovereign solutions keep up with the performance of the market leaders?’

Hardware – buy, rent or both?

LLMs require substantial computing capacity for both training and inference. Specialised GPUs are best suited for this, as they can perform a very large number of computations in parallel, which is exactly what running AI models demands.

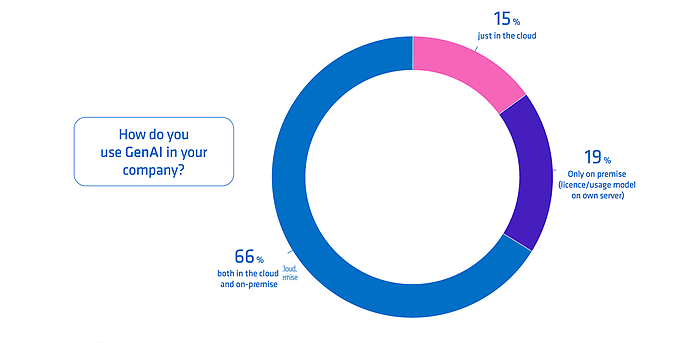

As part of our GenAI Impact Report Germany 2025, we asked companies where they host their GenAI applications. The results showed that 66 percent of companies have a hybrid approach, meaning they use both the cloud and their own on-premise infrastructure.

US hyperscalers are unrivalled when it comes to scaling and capacity. This year alone, Google, Microsoft and Amazon plan to invest around 250 billion US dollars in data centres. The sheer computing power of the major cloud providers is complemented by a software stack and ecosystem that has grown over many years. This makes cloud providers an attractive option for a wide range of businesses – and this is set to remain the case for the foreseeable future.

On the other side is the expansion of on-premise capacity. State-of-the-art GPUs are not cheap: a single GB200 chip from NVIDIA's latest Blackwell generation costs up to 70,000 US dollars, and a single server with eight GPUs costs around half a million. On top of this come the costs of operating and maintaining the infrastructure, which push up the total cost of ownership (TCO). That is not to say that everyone who wants to use on-premise LLMs has to invest half a million in GPUs right away: some lean models already run on end devices such as laptops. Like the cloud, on-premise will always have a place, especially for sensitive data that needs to be protected.
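The TCO trade-off can be made concrete with a back-of-the-envelope calculation. This sketch reuses the figure of roughly 500,000 US dollars for an eight-GPU server from above; the depreciation period, the yearly operating cost ratio and the cloud price per GPU-hour are hypothetical assumptions that would need to be replaced with real quotes.

```python
HOURS_PER_YEAR = 365 * 24


def total_cost_of_ownership(server_price: float, years: float, annual_opex_ratio: float) -> float:
    """Purchase price plus operating costs (power, cooling, staff) over the depreciation period.

    annual_opex_ratio is an assumption: yearly operating cost as a share of the purchase price.
    """
    return server_price * (1 + annual_opex_ratio * years)


def onprem_cost_per_gpu_hour(server_price: float, gpus: int, years: float,
                             annual_opex_ratio: float, utilisation: float) -> float:
    """Amortised on-premise cost per GPU-hour that is actually used (utilisation in (0, 1])."""
    used_gpu_hours = gpus * years * HOURS_PER_YEAR * utilisation
    return total_cost_of_ownership(server_price, years, annual_opex_ratio) / used_gpu_hours


def breakeven_utilisation(server_price: float, gpus: int, years: float,
                          annual_opex_ratio: float, cloud_price_per_gpu_hour: float) -> float:
    """Utilisation above which owning the server is cheaper than renting the same GPU-hours."""
    available_gpu_hours = gpus * years * HOURS_PER_YEAR
    tco = total_cost_of_ownership(server_price, years, annual_opex_ratio)
    return tco / (available_gpu_hours * cloud_price_per_gpu_hour)


# Server price follows the text; 4 years, 20 % opex and 6 USD/GPU-hour are hypothetical.
params = dict(server_price=500_000, gpus=8, years=4, annual_opex_ratio=0.2)
for u in (0.2, 0.6):
    cost = onprem_cost_per_gpu_hour(**params, utilisation=u)
    print(f"utilisation {u:.0%}: {cost:.2f} USD per GPU-hour")
print(f"break-even at {breakeven_utilisation(**params, cloud_price_per_gpu_hour=6.0):.0%} utilisation")
```

The decisive variable is utilisation: owned hardware only pays off if it is kept busy, which is one reason why hybrid setups are so common.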

Sovereign cloud offerings provide a middle ground between the two. These include European hyperscalers (such as STACKIT and IONOS) as well as dedicated offerings from US hyperscalers (such as AWS Sovereign Cloud and Google Sovereign Cloud). Their range of functions and capacity is growing steadily, making them a viable option for more and more workloads.

The question for the future is therefore: Which workloads can run in the cloud and which workloads are potentially better suited to on-premise environments or a sovereign cloud due to their sensitivity?

Overall, hardware can still be a bottleneck in some areas. Although the discussion is often framed as ‘cloud or on-premise’, most companies are in fact already pursuing hybrid approaches. A pragmatic look at the company's AI workloads, taking into account scaling requirements, functional requirements and data sensitivity, can help decide which workloads run in which operating model. TCO should also be considered, as hyperscalers can sometimes offer more attractive terms thanks to their economies of scale.
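The assignment of workloads to operating models can be written down as explicit, reviewable rules. The sketch below is hypothetical: the three sensitivity levels, the fields of `Workload` and the order of the checks are assumptions for illustration, not a recommended policy.

```python
from dataclasses import dataclass
from enum import Enum


class Hosting(Enum):
    ON_PREMISE = "on-premise"
    SOVEREIGN_CLOUD = "sovereign cloud"
    HYPERSCALER = "hyperscaler"


@dataclass
class Workload:
    name: str
    sensitivity: str               # assumed levels: "public", "internal", "confidential"
    needs_elastic_scale: bool      # large or highly variable load
    needs_proprietary_model: bool  # relies on a feature only a hyperscaler offers


def assign_hosting(w: Workload) -> Hosting:
    """Hypothetical rules: data sensitivity first, then scaling and functional needs."""
    if w.sensitivity == "confidential":
        return Hosting.ON_PREMISE          # most sensitive data stays on own infrastructure
    if w.sensitivity == "internal":
        return Hosting.SOVEREIGN_CLOUD     # internal data stays with a sovereign provider
    if w.needs_elastic_scale or w.needs_proprietary_model:
        return Hosting.HYPERSCALER         # non-sensitive, but needs scale or special features
    return Hosting.SOVEREIGN_CLOUD


workloads = [
    Workload("HR document assistant", "confidential", False, False),
    Workload("Internal knowledge chatbot", "internal", True, False),
    Workload("Public website FAQ bot", "public", True, False),
]
for w in workloads:
    print(f"{w.name}: {assign_hosting(w).value}")
```

Expressing the rules this way makes the trade-offs auditable: only workloads without sensitive data are sent to a hyperscaler, and only when scaling or functional requirements call for it.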

Focus on language models – can open source keep up with OpenAI and Co.?

LLMs are the ‘brain’ of every GenAI solution. They are trained on huge amounts of data, equivalent to around 10 trillion words, drawn from sources such as web crawls (CommonCrawl, RefinedWeb), social media and video platforms (YouTube, Reddit), books and public databases. This data is processed with architectures designed for language, most notably the transformer and its attention mechanism. This makes LLMs a powerful tool in many application areas, such as chatbots, information extraction from documents or translation. So-called ‘multimodal’ LLMs can also take different formats as input and output, such as audio, video and images.
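For readers curious about the attention mechanism mentioned above, here is a simplified sketch of scaled dot-product attention, softmax(Q·K^T / sqrt(d))·V, in plain Python. It deliberately omits what real transformers add on top (learned projection matrices, multiple heads, masking, batching) and only shows how each token's output becomes a weighted mix of the value vectors, weighted by query-key similarity.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    m = max(xs)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries: list[list[float]], keys: list[list[float]],
              values: list[list[float]]) -> list[list[float]]:
    """Scaled dot-product attention for a single head without learned projections."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Output = weighted sum of the value vectors
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs


# Three toy "tokens" with 2-dimensional embeddings (invented numbers).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# Self-attention: queries, keys and values all come from the same tokens here.
for row in attention(tokens, tokens, tokens):
    print([round(x, 3) for x in row])
```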

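A basic distinction can be made between proprietary and open-source models. Proprietary models (e.g. OpenAI o3 or Google Gemini 2.5 Pro) can only be used in the provider's environment or via its API. Open-source models, by contrast, are freely available and can be run on your own infrastructure; strictly speaking, many of them are ‘open-weight’ models, whose trained weights are published while the training data and code often are not.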

Over the last few years, the open-source community for AI models has developed rapidly. At the beginning of 2022, there were around 25,000 AI models available on Hugging Face (a platform for open-source AI models). Today, that number is over 1,800,000. Meta's Llama models alone have been downloaded more than a billion times.

Common benchmarks suggest that the best models still come from closed systems. Gemini 2.5 Pro from Google and o3 from OpenAI dominate the benchmarks, but the leading AI labs' lead is shrinking. While it still seemed unassailable in 2023 and 2024, providers of open-source models such as Meta, Mistral and DeepSeek now develop models that reach around 95 percent of the market leaders' performance. Six of the 20 leading models on LMArena are now open source. The trend is clear: the gap is closing, and innovations such as reasoning, long context windows and mixture-of-experts (MoE) architectures usually appear in open-source models within a few months.

In addition, the pure ‘intelligence’ of the model is not the only factor determining performance in a business context. Enriching the model with relevant, domain-specific data, fine-tuning, clever prompt engineering and intelligent integration of different models can also have a positive impact on performance.
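Enriching a model with domain-specific data is often done via retrieval-augmented generation (RAG): relevant documents are retrieved and placed into the prompt before the model answers. The sketch below assumes a deliberately simple word-overlap (bag-of-words cosine) retriever as a stand-in for the embedding models and vector databases used in practice; the example documents are invented.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"\w+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the question."""
    q = Counter(tokenize(question))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(question: str, documents: list[str], k: int = 2) -> str:
    """Place the retrieved context in front of the question."""
    context = "\n".join(f"- {d}" for d in retrieve(question, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


docs = [
    "Travel expenses must be submitted within 30 days.",
    "The canteen opens at 11:30.",
    "Expenses above 500 euros require approval by a manager.",
]
print(build_prompt("When do I have to submit travel expenses?", docs))
```

The resulting prompt would then be sent to whichever LLM is in use, open source or proprietary; the retrieval step is independent of that choice.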

In summary, open-source LLMs are now a valid alternative to closed systems and are perfectly adequate for a wide range of use cases.

The software stack

Once the necessary hardware is in place and the language models have been selected, one piece of the puzzle is still missing: the software that brings everything together into a solution. The requirements for a GenAI software stack are extensive, especially in production. Some of the most important tools include:

- Data storage: Provision of data storage solutions such as vector databases or knowledge graphs.

- LLM observability: The more complex AI solutions become, the more important it is to have tools that can monitor and analyse their behaviour.

- LLM evaluation: Targeted methods for evaluating the performance of different configurations.

- LLM orchestration and routing: Building complex workflows and routing systems.

- Security tools: Protection against attacks and establishment of stable guardrails.

- Memory management: Optimised management of the solution's ‘memory’ to improve performance over time.

- Communication protocols: Standardised protocols such as the Model Context Protocol (MCP) or Agent2Agent (A2A).

- Use-case-specific AI solutions: Tools built for a particular application.
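To illustrate the evaluation item in the list above, here is a minimal harness that scores several configurations against the same labelled test set. The configurations are hypothetical stubs; in practice each would wrap a particular model, prompt and retrieval setup, and exact match would usually be complemented by richer metrics.

```python
from typing import Callable

# A "configuration" is any callable mapping a question to an answer.
Config = Callable[[str], str]


def exact_match_accuracy(config: Config, test_set: list[tuple[str, str]]) -> float:
    """Share of questions answered exactly right (ignoring case and surrounding whitespace)."""
    hits = sum(config(q).strip().lower() == expected.strip().lower() for q, expected in test_set)
    return hits / len(test_set)


def compare(configs: dict[str, Config], test_set: list[tuple[str, str]]) -> dict[str, float]:
    """Evaluate every configuration on the same test set."""
    return {name: exact_match_accuracy(cfg, test_set) for name, cfg in configs.items()}


# Invented test set and stubbed configurations
test_set = [("Capital of France?", "Paris"), ("2 + 2?", "4"), ("Largest planet?", "Jupiter")]
answers_a = {"Capital of France?": "Paris", "2 + 2?": "4", "Largest planet?": "Saturn"}
answers_b = {"Capital of France?": "paris ", "2 + 2?": "4", "Largest planet?": "Jupiter"}
configs = {
    "config_a": lambda q: answers_a.get(q, ""),
    "config_b": lambda q: answers_b.get(q, ""),
}
print(compare(configs, test_set))
```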

The choice of software stack is closely linked to the other two layers. Hyperscalers offer their own solutions in their cloud environments (e.g. Vertex AI in Google Cloud or Azure OpenAI from Microsoft). These tools cover a wide range of services but are tied to the respective provider's cloud environment.
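One way to limit lock-in at the software level is to let application code depend on a small interface rather than on a vendor SDK. This sketch uses a Python `Protocol`; both backend classes are hypothetical placeholders where a real client for a managed cloud service or a self-hosted open-weight model would go.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Minimal interface the application relies on."""
    def complete(self, prompt: str) -> str: ...


class HyperscalerModel:
    """Hypothetical stand-in for a client of a cloud provider's managed LLM service."""
    def complete(self, prompt: str) -> str:
        return f"[hyperscaler] {prompt}"


class SelfHostedModel:
    """Hypothetical stand-in for an open-weight model on own or sovereign infrastructure."""
    def complete(self, prompt: str) -> str:
        return f"[self-hosted] {prompt}"


def summarise(model: ChatModel, text: str) -> str:
    # Application logic only knows the ChatModel interface, not a vendor SDK.
    return model.complete(f"Summarise: {text}")


print(summarise(HyperscalerModel(), "quarterly report"))
print(summarise(SelfHostedModel(), "quarterly report"))
```

Switching providers then means adding another class with a `complete` method, without touching the application logic.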

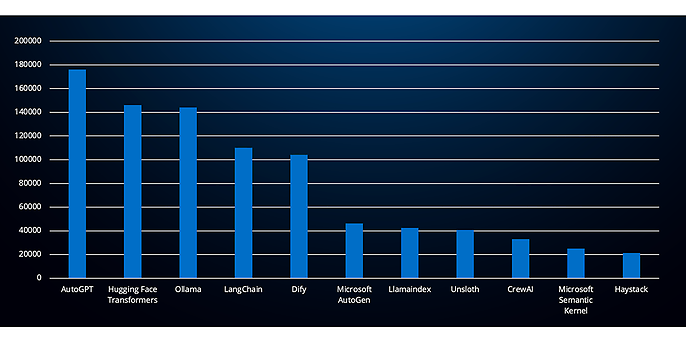

One development that greatly benefits sovereign solutions is the strong open-source community that has grown around AI development tools. Tools such as LangChain, Ollama and LlamaIndex are all available as open-source frameworks and form the basis of GenAI implementations in many projects. Five open-source tools have even passed 100,000 stars on GitHub, demonstrating their broad acceptance: AutoGPT, Hugging Face Transformers, Ollama, LangChain and Dify.

Open-source frameworks by GitHub stars
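Ollama, for example, exposes an OpenAI-compatible HTTP API (by default under `http://localhost:11434/v1`), so code written against the OpenAI chat completions format can often be pointed at a self-hosted open model by changing only the base URL and model name. The sketch builds such a request with the standard library without sending it; the model name `llama3.2` is an assumption and would need to be pulled locally first.

```python
import json
import urllib.request
from typing import Optional


def build_chat_request(base_url: str, model: str, prompt: str,
                       api_key: Optional[str] = None) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    headers = {"Content-Type": "application/json"}
    if api_key:  # hosted APIs need a key; a local Ollama instance does not
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


# Assumption: a local Ollama instance on its default port with the model already pulled.
req = build_chat_request("http://localhost:11434/v1", "llama3.2", "What is sovereign AI?")
print(req.full_url, req.get_method())
# To send it: json.load(urllib.request.urlopen(req))
```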

A potential disadvantage of open-source solutions compared to closed systems is the lack of enterprise functionality, especially when it comes to issues such as scaling and security. In addition, the options for managed services and support are significantly more limited.

Overall, open-source frameworks have a firm place in the development layer of GenAI solutions, and many established tools are already in production use. However, meeting the higher security and scaling requirements of enterprise architectures calls for investment in development expertise. Experienced developers can combine the independence and flexibility of open-source solutions with these requirements to build a sovereign software stack that is secure, highly competitive and ready for enterprise use. Ultimately, it all comes down to human expertise.

Conclusion on competitiveness

In summary, it can be said that sovereign approaches benefit greatly from the open source movement in the field of AI. LLMs and software are now available as highly capable open source components. Developments over the last 12 months also show that the gap between open source and closed systems is narrowing or disappearing altogether.

This means that you can be sure that an open-source stack will keep you future-proof in the areas of software and language models.

Hardware must be considered separately. The range of sovereign cloud solutions is growing rapidly and will gain in capacity and performance in the coming years. Nevertheless, US hyperscalers are still the benchmark when it comes to workload scalability. It is therefore important to carefully consider which processes will remain with hyperscalers in the foreseeable future and which (due to lower volumes or higher privacy requirements) belong in a sovereign cloud or on-premise instance.

What should companies do now?

The competitiveness of sovereign solutions is generally underestimated – but what does this mean for companies and what should be done now?

1. Carry out a detailed analysis of your own GenAI stack, focusing on where sovereign and open-source components are used or could be used.

2. Assign existing and planned AI workloads to the hosting option (hyperscaler, sovereign cloud, on-premise) that best fits their requirements.

adesso supports you on your path to sovereign AI. With over 100 successfully completed GenAI projects, we have the necessary expertise and know what it takes to get GenAI off the ground.

We support you!

Are you ready to take the next step towards sovereign AI? Then get in touch with us if you are interested in a GenAI Readiness Review or an implementation offer. Our experts are here to support you.