12 May 2023, by Chris Thurau

Trust in AI – how we can trust AI with a human life

Large language models (LLMs) such as Aleph Alpha’s Luminous or OpenAI’s GPT models are currently shaking up the world. The public release of ChatGPT at the end of November 2022 quickly brought artificial intelligence (AI) back into the public spotlight. While some people fear for their jobs, others welcome the active support these tools provide, be it at work or in everyday life. AI models can make our lives easier, but they also present us with new challenges. Teachers, for example, can now get help creating lesson plans, but they also have to decide how to deal with this ‘new’ technology when pupils use it. And LLMs are not only a way to gather information: we can also ask them for help and even let them make decisions for us. But how reliable is this technology, and would we trust it with a human life?

An introduction to the experiment and the research group

This is one of the questions I tackled in my bachelor’s dissertation. In the study, participants chose one of four interaction levels (ILs), and I compared the choices made by experts with those made by laypersons. Each IL represented a different degree of support that the AI provided to the user.

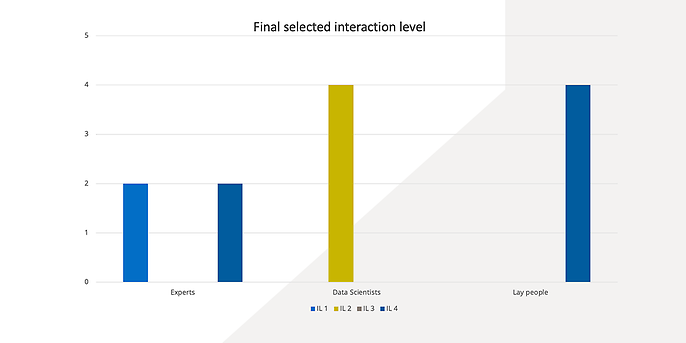

IL 1 offered only rudimentary support in the form of a source search. IL 2 was a Q&A function that could answer all the relevant questions on CPR, ranging from ‘When do I start performing CPR on someone?’ to ‘Which song will help me keep the rhythm during chest compressions?’. IL 3 provided a summary of the source, and IL 4 automated the process entirely. The level each participant ultimately chose also revealed which level of interaction they trusted most. CPR was chosen as the topic because most people have a rough idea of how it works, yet getting it right is a matter of life and death.

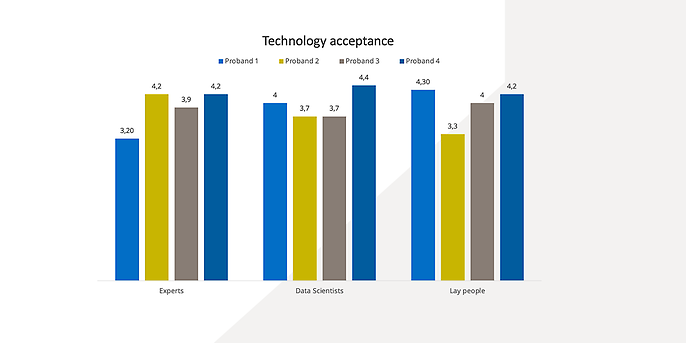

Twelve people were interviewed in total. Four of them had a medical background and formed the expert group. Four others had specialist knowledge in data science, and the remaining four were laypersons with no background in either field. After the interview, the participants also filled out a questionnaire on technology acceptance so that any bias arising from their general attitude towards technology could be taken into account when interpreting the results.

Preparing the AI

Next, an interface was developed with Streamlit and connected to the API of Aleph Alpha’s Luminous language model so that the participants could interact with the AI. The model was given a source text containing all the information needed to perform CPR on a human. No separate training was necessary, as the model can understand natural language and answer questions based on the text it is given. Prompt engineering was then used to steer Luminous towards short, concise answers that fitted the medical domain. A few-shot prompt was suitable for this: it contained two medical texts, each followed by a sample question and answer, to show the model the expected format of its output.
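To make the few-shot idea more concrete, here is a minimal sketch of how such a prompt could be assembled in Python. It is an illustration under stated assumptions, not the prompt used in the study: the `Example` class, the `build_few_shot_prompt` function, the `Text:`/`Question:`/`Answer:` labels and the `###` separator are all hypothetical choices, and the medical texts are placeholders. The code only builds the prompt string; sending it to Luminous (and the Streamlit front end) is not shown.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """One demonstration: a medical text plus a sample question and answer."""
    text: str
    question: str
    answer: str


def build_few_shot_prompt(examples: list[Example], source: str, question: str) -> str:
    """Assemble a few-shot prompt: demonstrations first, then the real source and question.

    The labels and the '###' separator are a hypothetical layout chosen for
    illustration; they are not taken from the study.
    """
    blocks = []
    for ex in examples:
        # Each demonstration shows the model the expected question/answer format.
        blocks.append(f"Text: {ex.text}\nQuestion: {ex.question}\nAnswer: {ex.answer}")
    # The final block leaves 'Answer:' open so the model completes it.
    blocks.append(f"Text: {source}\nQuestion: {question}\nAnswer:")
    return "\n###\n".join(blocks)


# Usage with placeholder texts (hypothetical content, not from the study).
examples = [
    Example("<medical text 1>", "<sample question 1>", "<short answer 1>"),
    Example("<medical text 2>", "<sample question 2>", "<short answer 2>"),
]
prompt = build_few_shot_prompt(
    examples, "<CPR source text>", "When do I start performing CPR on someone?"
)
print(prompt)
```

Ending the prompt with an open `Answer:` invites the model to continue in the demonstrated style; a separator such as `###` could also serve as a stop sequence so the model does not invent a further question, although whether the study did this is not stated.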

The differences between the experts, data scientists and laypersons

What were the results of the study? On a scale from 0 (lowest) to 5 (highest), the participants’ technology acceptance scores ranged from 3.2 to 4.4. On average, the data scientists and laypersons were slightly more willing to engage with technology than the experts, although the group averages were close together, between 3.88 and 3.95.

This pattern was also reflected in the ILs the groups chose. All the laypersons opted for IL 4, the automated performance of CPR, while the data scientists chose IL 2. The experts, however, were split: two chose IL 1 and two chose IL 4.

But what do these results tell us, and how should they be interpreted? Let us start with the laypersons. They chose the highest IL because they had the least experience in the field and needed the most support. It also allowed them to hand over responsibility in the event of complications, so they no longer had to fear legal repercussions.

The data scientists, on the other hand, did not want to give up control completely: their experience with AI had taught them that problems can always arise with technology. At the same time, the voice assistant gave them a sense of security thanks to the tips and explanations it can provide while they perform CPR.

One might therefore expect the experts, with their extensive experience, to choose the safest option and unanimously opt for IL 1. The two experts who opted for automation, however, justified their choice as follows: automation would reduce the mental and physical strain of performing CPR, and the device could reach the patient even faster than the ambulance service. It could also lower laypersons’ inhibitions about performing CPR and reduce the error rate. This did not mean that they trusted the device more than their own abilities, as they doubted it would reach medical-grade precision. Accordingly, they made their trust conditional on a long test phase and medical certification before the machine could be relied on. As the figure on technology acceptance shows, these two experts also had high acceptance scores and were therefore probably more inclined to engage with the technology.

Are prompt engineering and trustworthy AI the key to success?

What can we do with these findings? This study suggests that trust in a machine is not unconditional, but that we would still trust it with a human life under certain circumstances. Technical development must therefore go hand in hand with the trust we place in the technology if it is ultimately to be accepted by end users. Trustworthy AI and the targeted steering of models through prompt engineering will therefore be essential in the future. Meta AI’s Galactica shows what can happen otherwise: overwhelmed by the sheer amount of knowledge it had absorbed, it mixed up true facts, made up its own and began to ‘hallucinate’. At the same time, the setup used in this study shows how much we can now achieve with little effort using a large language model such as Luminous. An expensive and complex training process is not always necessary to achieve certain goals.
