26. December 2023 By Mykola Zubok

Data Evolution Chronicles: Tracing the Path from Warehouse to Lakehouse Architecture - Part 1

Before computers became ubiquitous, companies relied on ledgers, inventory lists and a healthy dose of intuition, along with other limited, manual methods, to track and analyse their metrics. In the late 1980s, however, the concept of a data warehouse emerged, primarily for the purpose of producing static reports. With the introduction of data warehouses, companies began to move from making decisions based on intuition to a data-driven approach.

The constant advancement of technology has further accelerated the digitalisation of businesses and brought new challenges to the field of data analysis. The well-known 3Vs - volume, velocity and veracity - have become central to defining the concept of big data. Big data has posed a formidable challenge to the established, traditional architectures of relational data warehouses. Organisations have had to adapt to analysing not only structured tables, but also semi-structured and unstructured data, giving rise to the concept of data lakes.

As a result of these evolving challenges, the architecture and concepts of enterprise analytic systems have changed. In the first part of my blog post, we will explore their evolution, the reasons for their emergence, and the problems they have addressed.

Data Warehouse

Let's look at a situation where we need to move to data-driven solutions. We already have various systems in place for sales, production, supply chain and more. The first question you might ask is: why can't I go directly into a production database, such as the one containing sales data, and generate a report? While this approach may work, consider the following scenarios. You may need to compare data from multiple systems, not just the sales system. Or you might want to analyse historical data that doesn't live in the production system for more than, say, 30 days. There are many such scenarios. This is where a data warehouse comes in. The definition of a data warehouse could be expressed as follows:

A data warehouse is a repository that stores data from multiple sources for historical and trending reporting. It acts as a centralised hub for different subject areas and contains the single version of the truth.

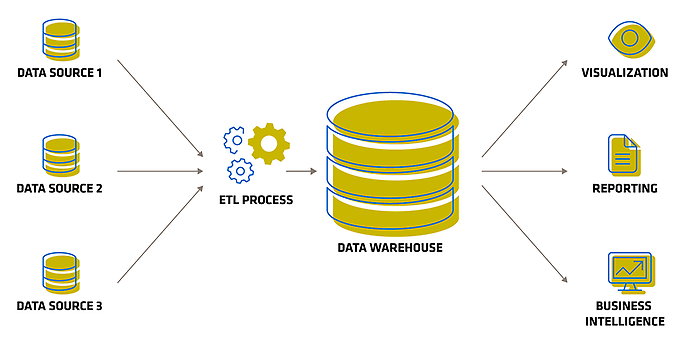

A Data Warehouse architecture

The data lifecycle typically consists of the following steps:

- 1. Data extraction: The process begins with the extraction of data from various source systems such as transactional databases, flat files, spreadsheets and external data sources.

- 2. Data transformation: Once the data has been extracted, it undergoes a transformation process to convert, cleanse and integrate it into a consistent format suitable for analysis.

- 3. Data loading: The transformed data is then loaded into the data warehouse.

- 4. Data storage: Data in a data warehouse is typically stored in a structured format optimised for query and analysis, such as a star or snowflake schema. The storage architecture is designed to support fast and efficient retrieval of data for reporting and analysis.

- 5. Metadata management: Metadata, which provides information about the data, is critical in a data warehouse environment. It includes details about data sources, transformation rules, data lineage and other information, helping users to understand and trust the data.

- 6. Business Intelligence and Reporting: The final step is to use business intelligence tools and reporting applications to analyse the data and gain insights. Users can create dashboards, visualisations and reports to support decision making within the organisation.

Which challenges solves data warehouse?

The benefits of a data warehouse are numerous and can have a significant impact on an organisation's data management, analysis and decision-making processes. Let's describe each of the benefits listed:

- 1. Reduce the load on the production system: By offloading analytical and reporting workloads to the data warehouse, you prevent resource-intensive queries from impacting production systems. This separation ensures that core business operations remain responsive and stable.

- 2. Optimised for Read Access, Sequential Disk Scans: Data warehouses are designed for efficient read access, which is critical for running complex analytical queries. Sequential disk scans improve query performance and enable faster data retrieval.

- 3. Integrate multiple data sources: Data warehouses consolidate data from multiple sources, including disparate databases, spreadsheets, and external data feeds, providing a single view of an organisation's data assets.

- 4. Preserve historical records: Data warehouses store historical data, eliminating the need to keep paper reports for reference. This historical data is readily available for trend analysis and historical reporting.

- 5. Restructure/rename tables and fields, model data: Data warehouses allow for data modelling and transformation, enabling organisations to structure data to meet analytical needs without impacting the source systems.

- 6. Protect against source system upgrades: Data warehouses act as a buffer between source systems and analytical tools. This isolation protects against disruptions caused by source system upgrades or changes.

- 7. Improve data quality and fill gaps in source systems: Data warehouses often include data cleansing and validation processes that improve data quality and fill gaps in source system data.

- 8. Single version of the truth: Data warehouses provide a single, consistent source of truth for organisational data. This eliminates the discrepancies and inconsistencies that can arise from using multiple data sources.

The introduction of data warehouses and data-driven solutions has significantly improved business efficiency. Over time, however, the limitations of data warehouses, such as their focus on tabular data, began to impede business progress. It became increasingly clear that new solutions were needed.

Data Lake

We've talked about data warehouses, which primarily handle structured data with well-defined schemas. As the cost of disk storage has fallen over time, the ability to store ever larger volumes of data has become a reality. This data is often less structured, ranging from audio and video to unstructured text documents.In simple terms, a data lake is just a data folder in cheap storage where you can store any data.

Data warehouse systems have proven to be less than ideal for managing large volumes of data or data with different structures. This is where the concept of a data lake came into play, providing a solution for storing all types of data in systems such as Hadoop Distributed File System (HDFS) and later AWS S3.

A data lake is a centralised repository that enables organisations to store and manage large volumes of structured, semi-structured and unstructured data at scale. Unlike traditional data warehouses, data lakes store data in its raw, native format without the need for a predefined schema. This flexibility enables organisations to cost-effectively collect and store large volumes of data from disparate sources, including log files, sensor data, social media and more.

Traditional data warehouses were monolithic, on-premises systems where computing and storage were tightly integrated. The era of big data brought with it distributed computing, where data was spread across multiple machines, but each machine still had a combination of compute and storage.

With the advent of cloud computing, there was a paradigm shift that allowed compute and storage to be separated on different machines. This architectural change proved to be much more efficient. In addition, in a cloud-based environment, the pay-as-you-go (OPEX) model became more favourable compared to the traditional capital expenditure (CAPEX) model, which involved up-front, often inflexible investments.

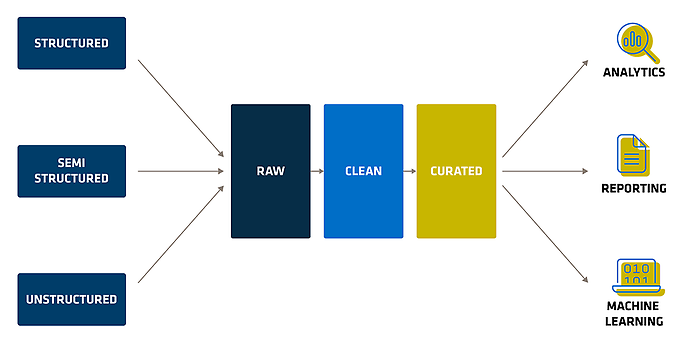

A classic data lake might look like this:

Visualization of a Data Lake

As can be seen, various data types are ingested into the raw data layer as they are, in their unmodified form. This data then undergoes a cleansing process in the clean data layer and is transformed to suit specific use cases. It's important to note that rather than referring to these layers as raw, clean and curated, different people or organisations use different terminology, such as

- Bronze, Silver, Gold

- Transitory, raw, trusted, refined

- Landing, raw, standardised, curated

- Raw, tiered, analytics

These alternative terminologies reflect the diversity and flexibility in structuring data management processes within the data ecosystem.

While the flexibility of a data lake is advantageous in many ways, it also has certain disadvantages.

- 1. Data governance: Ensuring data governance and maintaining data quality within data lakes can be a daunting task due to the initial lack of structure. Organisations need to establish robust governance practices.

- 2. Complexity: As the volume of data within data lakes grows, they can become increasingly complex to manage and navigate, potentially creating what is commonly referred to as a data swamp.

- 3. Security: Inadequate protection of data lakes can lead to security concerns, including unauthorised access and potential data breaches.

- 4. Latency: Achieving real-time data access and processing within data lakes can be more challenging than traditional data warehousing solutions.

- 5. Data swamp: This broad flexibility often results in the creation of a data dumping ground, a phenomenon often referred to as a data swamp.

Data lakes were initially hailed as a panacea for problems with relational data warehouses, promising cost savings, scalability and performance improvements. Companies such as Cloudera and Hortonworks marketed them as a one-size-fits-all solution. However, the reality proved challenging as querying data lakes required advanced skills. End users struggled with tools like Hive and Python, leading to failed attempts to replace traditional data warehouses with these supposedly magical solutions.

Data lakes provide a flexible and scalable solution for storing and analysing diverse and large data sets. However, to maximise the benefits and overcome the challenges of data lakes, organisations must carefully strategise and implement robust data governance and security measures. The choice between a data lake and a data warehouse depends on an organisation's specific data requirements and use cases, and in many cases a hybrid approach that incorporates both can prove beneficial. This hybrid approach will be briefly discussed in the second part of this blog post.

You can find more exciting topics from the adesso world in our blog articles published so far.

Also interesting: