17 November 2025, by Johannes Dienst

Continuous Deployment and GitOps with Flux CI

Chaos in Kubernetes due to manual changes and configuration deviations? Flux CI and GitOps principles bring order: In this blog post, I'll show you how the open-source solution automatically synchronises production environments and makes continuous delivery reliable.

The performance and flexibility of a Kubernetes architecture can easily be undermined in operation by configuration drift and manually modified infrastructure. In regulated industries such as banking, it is crucial that compliance with regulations and standards is maintained. So how can you ensure that the state of the production cluster does not drift unnoticed from the desired state, so that reliable continuous delivery (CD) does not become a gamble? An effective solution is to automate infrastructure using GitOps principles, where Git acts as the single source of truth. This is exactly the problem that Flux CI solves: it is an open-source solution for Kubernetes that runs inside the cluster, continuously retrieves the configuration from Git repositories and automatically synchronises the environment. Flux CI thus enables robust management of application deployments as well as infrastructure.

What is Flux CI?

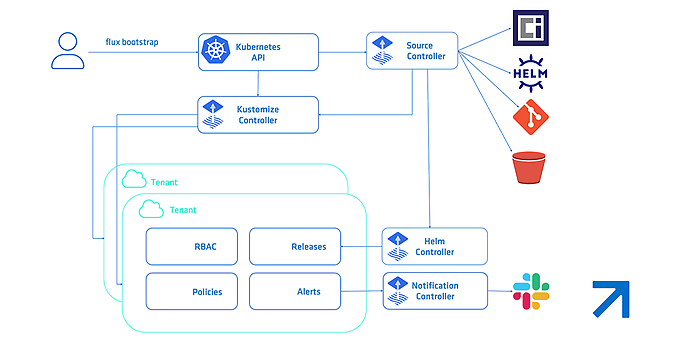

Flux is an open-source continuous delivery solution developed specifically for Kubernetes. At its core, Flux ensures that the state of the Kubernetes cluster remains permanently synchronised with the defined configuration sources. These are mainly Git, OCI and Helm repositories as well as Flux Kustomizations, but also plain Kubernetes manifests. Flux manages and deploys these resources and offers GitOps functions for both infrastructure configuration and application workloads. Flux uses the Kubernetes API and integrates seamlessly with other central components of the ecosystem. It supports multi-tenancy and the synchronisation of multiple Git repositories simultaneously.

Flux uses a set of Kubernetes API extensions, so-called custom resources, whose types are registered in the cluster through Custom Resource Definitions (CRDs). These define how external configuration sources, such as Git repositories, are applied to the cluster. For example, a GitRepository object is used to mirror the configuration from a Git repository as a so-called source. A Flux Kustomization object (a Flux custom resource, not to be confused with the kustomization.yaml file of the Kustomize tool) then synchronises this configuration with the cluster. This systematic approach enables Flux to implement application deployment (CD) and progressive delivery (PD) through fully automated reconciliation.

What makes Flux unique?

Flux enables seamless continuous delivery by strictly implementing GitOps principles, with Git as the single source of truth. The solution focuses on automatic synchronisation. Instead of relying on external CI pipelines that push changes to the cluster (which requires granting write access to external systems and managing credentials), Flux pulls configurations directly from Git. Configuration changes (manifests, Kustomize files, or Helm definitions) are pushed to a Git repository, and Flux takes care of the rest. The core components of Flux, namely the Source Controller, Kustomize Controller, and Helm Controller, run within the cluster, continuously monitor the specified Git repository for new commits, pull the data, and apply the desired state using server-side apply.

A sample project: Multi-environment with external application repositories

The sample project we are creating demonstrates a Flux setup for multiple environments. You can find the finished example on GitHub. The structure of the Git repository separates the management of the cluster setup (infrastructure) from the deployment of applications. Two separate environments are defined: staging and production, along with all the necessary infrastructure components. Specifically, the example deploys the Podinfo application and contains the configuration required to install ingress-nginx as the central cluster service. The root kustomization of our cluster configuration, clusters/my-cluster/kustomization.yaml, orchestrates the entire deployment by merging the Flux system manifests, namespaces, infrastructure components, RBAC definitions, and finally the application folder. This setup ensures that dependencies are managed effectively so that critical resources such as namespaces and the ingress controller are available before applications that depend on them are deployed.

├── apps/
│   ├── kustomization.yaml
│   └── podinfo/
│       ├── overlays/
│       │   ├── production/
│       │   └── staging/
│       └── sources/
│           └── flux-system/
└── clusters/
    └── my-cluster/
        ├── kustomization.yaml
        ├── flux-system/
        ├── infrastructure/
        ├── namespaces/
        └── rbac/
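
As a rough sketch, the root file clusters/my-cluster/kustomization.yaml described above could look like the following. The relative path to the apps folder and the assumption that every listed directory contains its own kustomization.yaml are illustrative and not taken from the example repository.

```yaml
# clusters/my-cluster/kustomization.yaml (illustrative sketch, not the original file)
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - flux-system      # Flux components and sync resources written by bootstrap
  - namespaces       # staging, production, ingress-nginx
  - infrastructure   # ingress-nginx source and HelmRelease
  - rbac             # service accounts and role bindings
  - ../../apps       # assumed relative path to the application folder
```

If a stricter ordering between parts of the setup is needed, Flux Kustomizations also offer a dependsOn field that makes one Kustomization wait for another.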

Preparations

To replicate a Flux setup like the one in this example locally, you will need the following:

- a local Kubernetes cluster (kind, "Kubernetes in Docker", is recommended),

- the locally installed Flux CLI, which is essential for bootstrapping and interacting with Flux,

- a Git repository hosted on GitHub as a source for configuration, and

- a GitHub personal access token with repository permissions, ideally exported as an environment variable together with your GitHub user name (here: GITHUB_TOKEN and GITHUB_USER).

First: Bootstrap Flux

Bootstrapping is the first step in using Flux and the officially supported method of installation. It installs Flux on a Kubernetes cluster so that Flux itself is managed by GitOps.

The following command creates a personal Git repository with the Flux system resources on GitHub and installs the Flux system in the Kubernetes cluster.

CODE BOX START
export GITHUB_TOKEN=<Your GitHub Token>
export GITHUB_USER=<Your GitHub Username>

flux bootstrap github \
  --owner=$GITHUB_USER \
  --repository=<your repository name> \
  --branch=main \
  --path=./clusters/my-cluster \
  --personal
CODE BOX END

When you run the flux bootstrap command, several steps are performed:

1. Flux generates and pushes the core Flux component manifests (Source Controller, Kustomize Controller, etc.) to the specified Git repository.

2. It deploys these components and the required Custom Resource Definitions (CRDs) to the Kubernetes cluster.

3. Flux then creates its own synchronisation resources: a GitRepository resource that references the configuration repository and a Kustomization resource (visible in our example as the flux-system Kustomization) that is configured to reconcile the bootstrap path (./clusters/my-cluster), which contains the flux-system directory.

This means that the entire continuous delivery system – i.e. Flux itself – is defined, managed and versioned in Git. If Flux settings need to be changed, a commit in Git is all it takes, and Flux synchronises its own state accordingly.
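
For orientation, the synchronisation resources from step 3 typically resemble the following sketch. The placeholders, the intervals and the SSH URL are assumptions; the file that bootstrap actually writes into your repository is authoritative.

```yaml
# Sketch of the sync resources created by bootstrap (values are illustrative)
apiVersion: source.toolkit.fluxcd.io/v1
kind: GitRepository
metadata:
  name: flux-system
  namespace: flux-system
spec:
  interval: 1m0s
  ref:
    branch: main
  secretRef:
    name: flux-system   # credentials created during bootstrap
  url: ssh://git@github.com/<your GitHub username>/<your repository name>
---
apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: flux-system
  namespace: flux-system
spec:
  interval: 10m0s
  path: ./clusters/my-cluster   # the --path passed to flux bootstrap
  prune: true
  sourceRef:
    kind: GitRepository
    name: flux-system
```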

Setting up the infrastructure

The infrastructure is managed under the path clusters/my-cluster. This ensures that these components are set up before applications are deployed.

1. Namespaces

The basic step is to create the logical boundaries. Define the namespaces staging, production, and ingress-nginx using standard YAML manifests stored in the clusters/my-cluster/namespaces directory.

YAML CODE START
apiVersion: v1
kind: Namespace
metadata:
  name: staging
  labels:
    workspace: staging
YAML CODE END
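
The two remaining namespaces can be declared in the same way. In this sketch, the workspace label on production simply mirrors the staging manifest and is an assumption, as is the absence of labels on ingress-nginx.

```yaml
# Sketch of the remaining namespaces (labels are assumptions)
apiVersion: v1
kind: Namespace
metadata:
  name: production
  labels:
    workspace: production
---
apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
```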

2. Role-based access control (RBAC)

Security is a top priority in multi-environment setups. Specific service accounts (such as staging-flux and production-flux in the flux-system namespace) are defined, and RoleBindings are then used to grant these service accounts the necessary permissions – exclusively within their respective target namespaces.

For example, the production-flux account is bound to the cluster-admin ClusterRole through a RoleBinding in the production namespace, so its rights apply only within that namespace. This ensures isolation. If you want to implement the principle of least privilege for service account permissions, you can also define custom roles. For this example, however, we will take the easy route.
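
A minimal sketch of this binding for production could look as follows. The service account and namespace names come from the text above; the name of the RoleBinding is made up for illustration.

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: production-flux
  namespace: flux-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: production-flux-admin   # hypothetical name
  namespace: production         # permissions apply only inside this namespace
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: production-flux
    namespace: flux-system
```

Because a RoleBinding rather than a ClusterRoleBinding references the ClusterRole, the granted rights stay limited to the production namespace.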

3. Ingress-Nginx

The ingress controller is deployed using a HelmRelease (defined in clusters/my-cluster/infrastructure), which obtains the chart directly from its official Git repository. A specific service account (ingress-nginx-flux) is also defined for this deployment.

Helm chart source as a Flux GitRepository

To define a Helm chart source from a Git repository, you reference the repository and can use the ignore field to limit the fetched artifact to the directory that contains the charts.

YAML CODE START
apiVersion: source.toolkit.fluxcd.io/v1
kind: GitRepository
metadata:
  name: ingress-nginx-controller-source
  namespace: flux-system
spec:
  interval: 60m0s
  ref:
    branch: main
  url: https://github.com/kubernetes/ingress-nginx
  ignore: |
    # exclude all
    /*
    # include charts directory only!
    !/charts/
YAML CODE END

Helm chart as HelmRelease custom resource

It is important to specify the chart's directory in spec.chart.spec.chart within the HelmRelease custom resource so that Flux knows where to find the chart.

YAML CODE START
apiVersion: helm.toolkit.fluxcd.io/v2
kind: HelmRelease
metadata:
  name: ingress-nginx
  namespace: flux-system
spec:
  serviceAccountName: ingress-nginx-flux
  targetNamespace: ingress-nginx
  storageNamespace: ingress-nginx
  interval: 10m
  chart:
    spec:
      chart: charts/ingress-nginx # path to the chart within the repo
      version: 4.11.2 # note: Flux documents this field as ignored for GitRepository sources
      sourceRef:
        kind: GitRepository
        name: ingress-nginx-controller-source
        namespace: flux-system
      reconcileStrategy: Revision # good default for Git sources
  install:
    createNamespace: true
    remediation:
      retries: 3
  upgrade:
    remediation:
      retries: 3
  values:
    controller:
      replicaCount: 1
      service:
        type: LoadBalancer
    fullnameOverride: ingress-nginx
YAML CODE END

Separation of application configuration and infrastructure

The separation between the clusters folder (infrastructure) and the apps folder (application configuration) is a key feature of our setup. This follows a standard GitOps structure, which is often found in ‘repo-per-team’ or monorepo approaches.

Cluster setup (clusters/my-cluster): This area defines the platform – namespaces, RBAC, shared services (such as ingress-nginx) and the basic Flux setup. This area is typically managed by a platform engineering or administration team.

Application development (apps): This directory is entirely dedicated to the workloads deployed on the platform.

For the Podinfo application, it contains the necessary GitRepository source definition and separate overlays for staging and production. These overlays make it possible to apply environment-specific configurations such as different ingress hosts (e.g. fluxv2infrademo.io for production vs. fluxv2infrademo.staging.io for staging) without touching the application's base manifests. This separation of responsibilities allows different teams to manage their respective configuration areas independently.

The platform team ensures the stability of the cluster infrastructure, while the development teams can focus on iterating quickly in the apps directory. This simplifies change management and reduces the risk of unintentionally affecting cluster-wide resources.

Repository structure for applications

For our example, we use the Podinfo application, which is provided from a Git repository. We store it as a Flux source in the Flux namespace flux-system so that any Flux Kustomization in the same namespace can access it.

Note: This only works as long as cross-namespace references are allowed, i.e. the Flux controllers are not started with --no-cross-namespace-refs=true. Otherwise, each overlay would have to specify the source in its own namespace.

Podinfo Source in apps/sources/flux-system

YAML CODE START
apiVersion: source.toolkit.fluxcd.io/v1
kind: GitRepository
metadata:
  name: podinfo
  namespace: flux-system
spec:
  interval: 1m0s
  ref:
    branch: master
  url: https://github.com/stefanprodan/podinfo
YAML CODE END

Podinfo Kustomization (Flux custom resource) in apps/podinfo/overlays/(staging,production)

YAML CODE START
apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: podinfo-staging
  namespace: flux-system
spec:
  serviceAccountName: staging-flux
  interval: 1m0s
  path: ./kustomize
  prune: true
  retryInterval: 2m0s
  sourceRef:
    kind: GitRepository
    name: podinfo
    namespace: flux-system
  targetNamespace: staging
  timeout: 3m0s
  wait: true
  patches:
    - patch: |-
        apiVersion: autoscaling/v2
        kind: HorizontalPodAutoscaler
        metadata:
          name: podinfo
        spec:
          minReplicas: 2
      target:
        name: podinfo
        kind: HorizontalPodAutoscaler
YAML CODE END

Standard Kubernetes Ingress in apps/podinfo/overlays/(staging,production)

YAML CODE START
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: podinfo-ingress
  namespace: staging # Ensure this matches the namespace of the podinfo service
  labels:
    app.kubernetes.io/name: podinfo-ingress-staging
  annotations:
    kubernetes.io/ingress.class: "nginx"
spec:
  ingressClassName: nginx
  rules:
    - host: fluxv2infrademo.staging.io
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: podinfo # Ensure this matches the podinfo service name
                port:
                  number: 9898
YAML CODE END
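
The production overlay follows the same pattern. The sketch below derives its values from the staging manifests, the production-flux service account and the production host mentioned earlier. The resource names and the omission of the HorizontalPodAutoscaler patch are assumptions, not copies of the original files.

```yaml
# Sketch of a production counterpart (names assumed by analogy to staging)
apiVersion: kustomize.toolkit.fluxcd.io/v1
kind: Kustomization
metadata:
  name: podinfo-production   # assumed name, analogous to podinfo-staging
  namespace: flux-system
spec:
  serviceAccountName: production-flux
  interval: 1m0s
  path: ./kustomize
  prune: true
  retryInterval: 2m0s
  sourceRef:
    kind: GitRepository
    name: podinfo
    namespace: flux-system
  targetNamespace: production
  timeout: 3m0s
  wait: true
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: podinfo-ingress
  namespace: production
spec:
  ingressClassName: nginx
  rules:
    - host: fluxv2infrademo.io   # production host named in the text
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: podinfo
                port:
                  number: 9898
```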

Testing the setup

Now that the cluster has been configured, the Podinfo application deployed, and an ingress set up, you are almost ready to test the setup. Before doing so, you must add the following entries to the /etc/hosts file (Windows: C:\windows\system32\drivers\etc\hosts) so that fluxv2infrademo.staging.io and fluxv2infrademo.io resolve to your local machine.

SHELL SCRIPT START
## Add this to the hosts file
127.0.0.1 fluxv2infrademo.io
127.0.0.1 fluxv2infrademo.staging.io
SHELL SCRIPT END

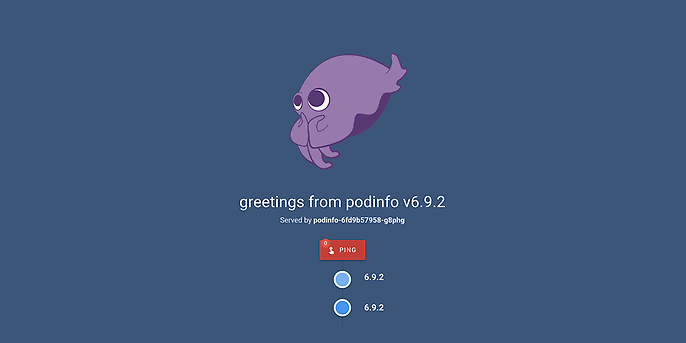

If you open fluxv2infrademo.staging.io in a browser, you will see the Podinfo status page.

Conclusion

Flux, combined with a structured repository layout, cleanly separates the management of infrastructure and applications across different environments. The automated reconciliation brings much-needed consistency and reliability to the continuous delivery pipeline. This is a decisive advantage, especially for banks, as they are subject to strict regulations and must ensure error-free provision of applications and infrastructure.
