29 September 2023, by Sezen Ipek and Stefan Mönk

Agility meets data science: strategies with great potential in DS projects

What do artificial intelligence (AI), machine learning (ML), deep learning (DL) and data analytics/data analysis have to do with agility? This blog post explores that question. Agility and data science have both taken on a larger role in recent years. Using agile methods and frameworks can be a key factor in a company’s success. In this and future blog posts, we will focus primarily on the question of whether and how agile approaches can be integrated into the field of data science. But first, we will give you a basic overview of what you need to know about data science and agility.

Data science and software development – where the differences lie

It is important not to conflate data science with software development. The latter involves the design of applications or systems that meet specific requirements. It is about writing code that runs and is maintained on different platforms. Data science, by contrast, focuses on analysing data to gain insights and model patterns. This requires an experimental approach, since the data and the possible outcomes cannot be reliably predicted in advance. It is important to understand that software and machine learning models are deployed in different ways. The reason is that software programs are largely static, whereas machine learning models change constantly and have to be retrained on new data. When you really look at it, data science and data mining have more in common with R&D than with engineering. A prime example of this is CRISP-DM (Cross-Industry Standard Process for Data Mining), which is focused on exploring new approaches rather than on software design. If you are now wondering what CRISP-DM is, we will explore this in the paragraphs to follow.

Terms used in data science and where the differences lie

Before we further explore the topic of agile software development in data science, it is important to first define the different terms. While artificial intelligence, machine learning, deep learning and data analytics/analysis are closely related, they each have their own distinct features and applications. So we will now take a quick look at each of these fields and explain how they should be understood within the context of data science.

Data science

Data science is an interdisciplinary field of applied science. The aim is to generate knowledge from data and use this to support decision-making processes or optimise business procedures. It also involves the scientific exploration of the process of creating, validating and transforming data in order to gain insights from it. Beyond that, data science draws on scientific principles to generate meaning from data. It employs machine learning and algorithms to extract and manage information from large data sets.

Under this definition, a data scientist uses scientific methods from the fields of mathematics, statistics, stochastics and computer science. In addition to generating knowledge from data, other objectives include creating recommendations for action, supporting the decision-making process and optimising as well as automating business processes. It is also used to produce forecasts and predictions for future events.

Artificial intelligence

The field of artificial intelligence (AI) is broad in scope and highly interdisciplinary. There are many different definitions for AI. One common feature of them all is that they define the term to mean the development of computer programs or systems whose behaviour can be described as ‘intelligent’.

The terms ‘data science’ and ‘AI’ are closely related. Data science is viewed as an interdisciplinary field of research that uses a variety of processes and methods to generate new knowledge from data, including data preparation, analysis, visualisation and forecasting. The main difference is that AI focuses on generating models, which can be used in data science projects to address specific issues.

Machine learning/deep learning

Machine learning and deep learning, which is a special type of machine learning, are AI-related disciplines. Machine learning refers to the artificial generation of knowledge from experience, most importantly from existing training data. It extracts patterns and describes them mathematically using a variety of supervised, unsupervised and reinforcement learning methods that make it possible to learn models as well as evaluate and handle complex systems. Natural language processing and image recognition are typical applications.
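To make the distinction between supervised and unsupervised learning more tangible, here is a minimal sketch in plain Python. It is purely illustrative and not taken from any particular project: the function names `nearest_neighbour_predict` and `two_means`, as well as the toy numbers, are our own assumptions. The supervised function learns from labelled examples, while the unsupervised one has to find groups in unlabelled values on its own.

```python
def nearest_neighbour_predict(training_data, x):
    """Supervised: return the label of the closest labelled example.

    `training_data` is a list of (value, label) pairs (hypothetical toy data).
    """
    _, closest_label = min(training_data, key=lambda pair: abs(pair[0] - x))
    return closest_label


def two_means(values, iterations=10):
    """Unsupervised: split unlabelled 1-D values into two clusters (k-means, k=2)."""
    low, high = min(values), max(values)  # initial cluster centres
    cluster_low, cluster_high = list(values), []
    for _ in range(iterations):
        # Assign every value to the nearer of the two centres.
        cluster_low = [v for v in values if abs(v - low) <= abs(v - high)]
        cluster_high = [v for v in values if abs(v - low) > abs(v - high)]
        if not cluster_low or not cluster_high:
            break  # all values ended up in one cluster
        # Move each centre to the mean of its cluster.
        low = sum(cluster_low) / len(cluster_low)
        high = sum(cluster_high) / len(cluster_high)
    return sorted(cluster_low), sorted(cluster_high)


labelled = [(1.0, "small"), (1.2, "small"), (8.0, "large"), (9.1, "large")]
print(nearest_neighbour_predict(labelled, 2.0))

unlabelled = [1.0, 1.2, 0.8, 8.0, 9.1, 8.7]
print(two_means(unlabelled))
```

In practice you would normally reach for an established library rather than hand-written functions like these. The sketch is only meant to show that supervised methods need labels, whereas unsupervised methods work without them.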

Data analytics/analysis

Data analytics is considered to be a subdomain of data science that involves the aggregation, storage, processing and analysis of data in order to gain strategic and business-related insights from it. The focus is on generating descriptive insights out of data. While there are methods and processes that data science and data analytics have in common, data science also employs other processes that data analytics does not.

Data analysis should be seen as a subdomain of data analytics involving the analysis of individual data sets in order to gain insights from them. The difference between data analytics and data analysis is reflected in their respective objectives. While data analysis seeks to gain insights from historical data as well as interpret and visualise these, data analytics is more focused on forecasting future events and making recommendations for action based on these forecasts.

What methods are used in data science?

Data science requires a systematic approach and a clearly defined process in which tasks are structured and there is a clean line of separation between the individual phases. This is necessary to provide a framework for data scientists and other stakeholders that they can use to structure data analytics tasks and address potential challenges. CRISP-DM and CRISP-ML(Q) are popular process models used in the data science field.

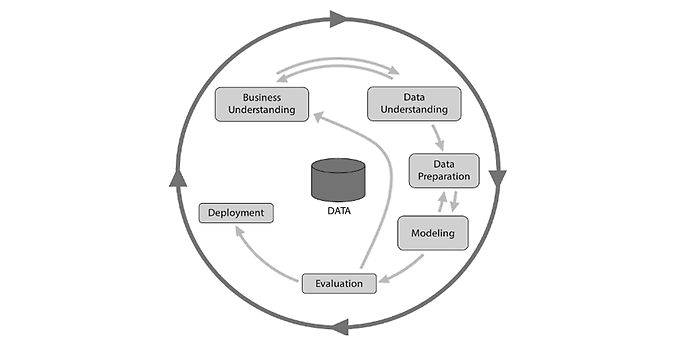

CRISP-DM phases, source: Haneke et al., 2021: 9

CRISP-DM phases

The CRISP-DM approach, which is iterative and cyclical, is divided into six phases:

- Business understanding: During this phase, the aim is to understand the business, find out the current situation and identify the project goals.

- Data understanding: This phase focuses on identifying and understanding the data and the sources of data, and also involves carrying out an exploratory data analysis.

- Data preparation: This phase is critical as it allows you to prepare the data for use in training the models.

- Modelling: During this phase, the model is trained using data mining algorithms such as classification, clustering or regression.

- Evaluation: The outputs generated by the model are evaluated during this phase.

- Deployment: This phase involves operationalising the results and monitoring the operational conditions.
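The iterative, cyclical nature of the six phases can be sketched in a few lines of Python. This is a simplified illustration under our own assumptions, not an official CRISP-DM implementation: the `Phase` enum, the `run_crisp_dm` function and the `evaluation_passes` callback are hypothetical names, and a real project may jump back to any earlier phase rather than restarting the whole cycle.

```python
from enum import Enum


class Phase(Enum):
    BUSINESS_UNDERSTANDING = 1
    DATA_UNDERSTANDING = 2
    DATA_PREPARATION = 3
    MODELLING = 4
    EVALUATION = 5
    DEPLOYMENT = 6


# Every phase that is repeated until the evaluation is satisfactory.
PRE_DEPLOYMENT = [phase for phase in Phase if phase is not Phase.DEPLOYMENT]


def run_crisp_dm(evaluation_passes, max_iterations=5):
    """Repeat the cycle until the evaluation passes, then deploy.

    `evaluation_passes` is a hypothetical callback that receives the
    iteration number and returns True once the project goals are met.
    Returns the list of (iteration, phase) steps that were visited.
    """
    history = []
    for iteration in range(1, max_iterations + 1):
        history.extend((iteration, phase) for phase in PRE_DEPLOYMENT)
        if evaluation_passes(iteration):
            history.append((iteration, Phase.DEPLOYMENT))
            break
    return history


# Example: the model only meets the goals in the second pass.
for iteration, phase in run_crisp_dm(lambda i: i >= 2):
    print(iteration, phase.name)
```

The point of the sketch is that deployment is only reached once the evaluation phase is satisfied. Until then, the team goes back and revisits the earlier phases.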

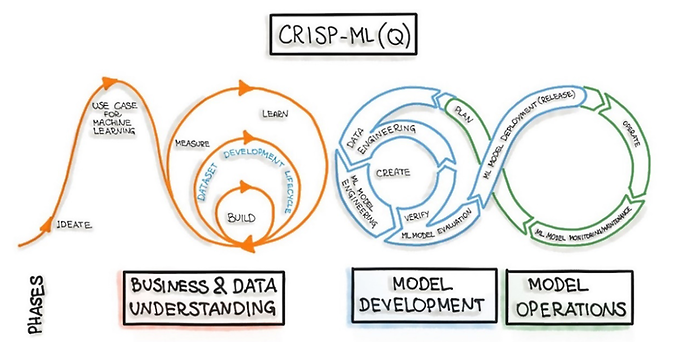

CRISP-ML(Q) (Cross-Industry Standard Process for the development of Machine Learning applications with Quality assurance methodology)

CRISP-ML(Q) can be viewed as an enhanced version of the CRISP-DM approach. It helps ensure that the principles of machine learning operations (MLOps) are observed and implemented. MLOps is an iterative process that is considered vital to the success of data science projects. It helps generate value and minimise potential risks in data science, AI and machine learning projects. The aim of MLOps is to standardise the life cycle of machine learning models. The CRISP-ML(Q) approach was designed for developing applications in which a machine learning model is used. Like CRISP-DM, CRISP-ML(Q) is an iterative model consisting of several phases.

As noted earlier, software and machine learning models are deployed in different ways: software programs are largely static, whereas machine learning models change constantly and have to be retrained on new data. The model environment is therefore more complex and calls for MLOps to assess and mitigate risks.
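One way to picture why a deployed model needs ongoing attention is a simple check that compares new data with the data the model was trained on. The following sketch is only an assumption on our part about what such a check could look like. The function name `needs_retraining`, the threshold of two standard deviations and the sample values are all hypothetical, and real MLOps setups typically use more robust monitoring.

```python
from statistics import mean, stdev


def needs_retraining(training_values, live_values, threshold=2.0):
    """Flag retraining when the live data drifts away from the training data.

    Hypothetical rule: return True if the mean of the live values is more
    than `threshold` training standard deviations from the training mean.
    """
    baseline = mean(training_values)
    spread = stdev(training_values)
    if spread == 0:
        # No variation in the training data: any shift counts as drift.
        return mean(live_values) != baseline
    return abs(mean(live_values) - baseline) / spread > threshold


training = [10.0, 11.0, 9.0, 10.5, 9.5]
print(needs_retraining(training, [10.2, 9.8, 10.1]))   # similar to training data
print(needs_retraining(training, [14.0, 15.0, 13.5]))  # clearly shifted
```

A static software program has no equivalent of this check, which is one reason why the deployment of models is handled differently.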

CRISP-ML(Q)

Outlook

Data science and agility are factors that drive success in today’s data-driven world. In this blog post, we covered the basics of data science as they relate to agility. It is important to understand that data science is different from standard software development and involves complex tasks with lots of experimentation. We also defined AI, ML, data analytics and data analysis to ensure everyone is clear about what each of the terms means. Process models such as CRISP-DM and CRISP-ML(Q) are a great tool because they allow us to take a systematic approach. But how do we deploy agile strategies in data science projects? Are there criteria that influence the decision on whether to use specific agile methods? And are we willing to do what it takes to be truly agile? We will answer these questions in our next blog post.
