29 December 2023, by Azza Baatout

The path to traceability in AI projects

Why we strive for traceability: because things can go wrong

There are many ways that machine learning can fail in a production environment. And when it does fail, this can lead to inaccurate forecasts or distorted results. It is often difficult to recognise unexpected behaviour like this, especially if the business appears to be successful. In the event of an incident, traceability allows us to identify the cause of the problem and take fast action in response to it. We can easily identify which code version is responsible for the forecast and training as well as which data was used.

Step-by-step guide to developing a traceable data pipeline

By creating clear paths for data from the source to the final results, traceable pipelines enable teams to detect errors, identify the root causes of problems and ultimately improve the quality of the results. They provide a solid foundation for reproducible research and collaborative work. Traceable data pipelines also allow users to manage changes to data or models efficiently.

DVC

In this practical example, we will create a traceable data pipeline using DVC (Data Version Control), a free, open-source tool for data management, ML pipeline automation and experiment management. It helps ML teams manage large data sets, track versions of models, data and pipelines, and make projects reproducible in general. You can find out more about DVC at https://dvc.org/

Setup

I use Python 3 for the configuration. I then add DVC to my environment (conda or poetry, depending on which one best suits my needs) and initialise it using the command ‘dvc init’.

After DVC has been initialised, a new directory called .dvc/ is created. This directory contains internal settings, cache files and directories that the user does not normally see. It is automatically added to the staging area using git add so that it can be easily committed with Git.

Step 1: data versioning

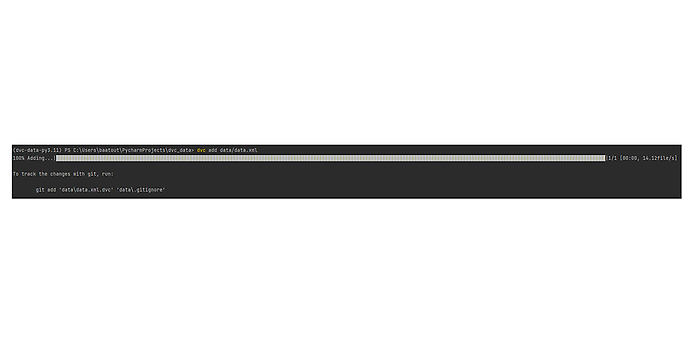

Using dvc add, we can track data sets, models or large files by specifying them as targets. DVC manages the corresponding *.dvc files and ensures data consistency in the workspace.

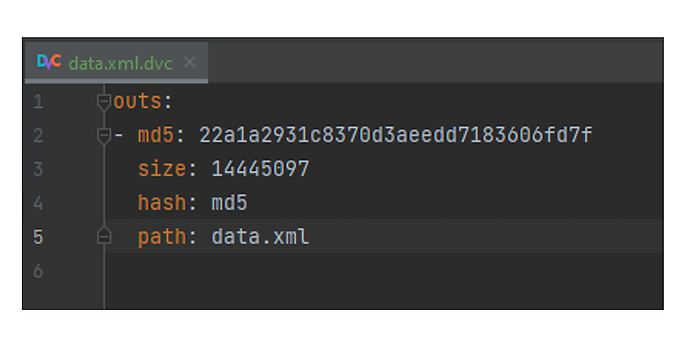

After executing the command, a data.xml.dvc file is created.

The MD5 value displayed in the image is the hash value of the file or directory that is being monitored by DVC. In this case, it is the hash value of the data.xml file, which contains the information needed to track the target data over time.
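To make the role of this hash more concrete, the following minimal Python sketch computes an MD5 digest of a file's contents using only the standard library. It illustrates content hashing in general and is not DVC's own implementation; the helper name `file_md5` and the temporary stand-in for data.xml are assumptions made for this example.

```python
import hashlib
import tempfile
from pathlib import Path


def file_md5(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex MD5 digest of a file, read in chunks to limit memory use."""
    digest = hashlib.md5()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


# Hypothetical stand-in for data.xml, created in a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    data_file = Path(tmp) / "data.xml"
    data_file.write_bytes(b"<rows><row id='1'/></rows>")
    hash_before = file_md5(data_file)

    # Any change to the content yields a different digest.
    data_file.write_bytes(b"<rows><row id='1'/><row id='2'/></rows>")
    hash_after = file_md5(data_file)

print(hash_before != hash_after)
```

Because the digest depends only on the content, comparing two digests is enough to tell whether the tracked data has changed between two points in time.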

For new files and directories that are not yet tracked, DVC creates new *.dvc files to track the added data and stores the data itself in the cache.

- DVC supports two different storage types: remote storage via a cloud provider and self-hosted/local storage.

- Using dvc pull, data artefacts created by colleagues can be downloaded without having to spend time and resources creating them again locally.

- Conversely, dvc push can be used to upload the latest changes to remote or local storage systems.
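The idea behind pushing and pulling artefacts can be sketched in a few lines of Python. This is a simplified illustration of a content-addressed store, assuming a local directory as the "remote"; the functions `push` and `pull` and all file names are hypothetical and do not reflect how DVC lays out its cache or remotes internally.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def push(workspace_file: Path, remote_dir: Path) -> str:
    """Copy a file into the store under its content hash and return the hash."""
    digest = hashlib.md5(workspace_file.read_bytes()).hexdigest()
    target = remote_dir / digest
    if not target.exists():  # identical content is stored only once
        shutil.copyfile(workspace_file, target)
    return digest


def pull(digest: str, remote_dir: Path, destination: Path) -> None:
    """Restore the file with the given content hash into the workspace."""
    shutil.copyfile(remote_dir / digest, destination)


with tempfile.TemporaryDirectory() as tmp:
    remote = Path(tmp) / "remote"
    remote.mkdir()
    original = Path(tmp) / "model.bin"
    original.write_bytes(b"trained-weights")

    # A colleague uploads the artefact once ...
    recorded_hash = push(original, remote)

    # ... and we download it instead of recreating it locally.
    restored = Path(tmp) / "model_copy.bin"
    pull(recorded_hash, remote, restored)
    restored_bytes = restored.read_bytes()

print(restored_bytes == b"trained-weights")
```

Only the small hash needs to be shared through Git; the large artefact itself travels through the storage.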

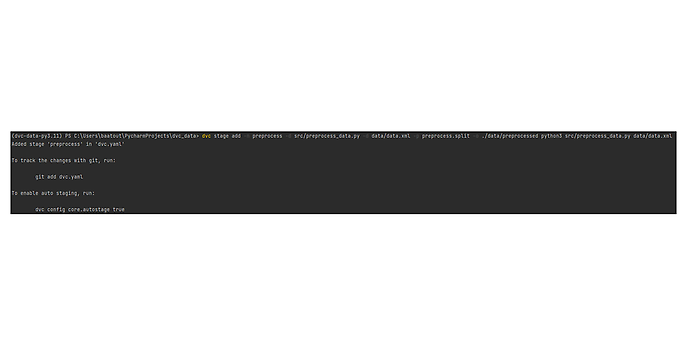

Step 2: defining the data pipeline

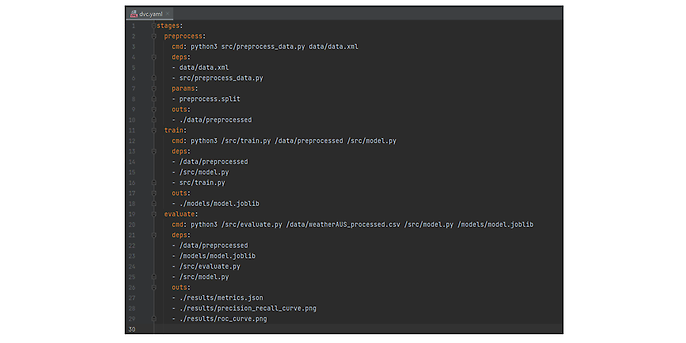

In this example, a DVC pipeline is created by defining three stages in the dvc.yaml file:

- The preprocess stage prepares the raw data for further processing.

- The train stage trains any model on the pre-processed data.

- The evaluate stage assesses performance based on the output of the model.

These stages are either defined manually in the dvc.yaml file or created directly by entering dvc stage add in the command line.

The command accepts the following options:

- -n: the name of the stage

- -d: the dependencies of this stage

- -o: the outputs of the stage

- -p: the parameters that the stage uses from the params.yaml file
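As a rough illustration of how these options fit together, the Python sketch below assembles a `dvc stage add` command line from a stage description. The helper `stage_add_command` and all file and parameter names (`src/train.py`, `data/prepared`, `models/model.pkl`, `train.epochs`) are hypothetical and not taken from the original project; the sketch only builds the command and does not run it.

```python
import shlex


def stage_add_command(name, command, deps=(), outs=(), params=()):
    """Build the argument list for `dvc stage add` from a stage description."""
    argv = ["dvc", "stage", "add", "-n", name]
    for dep in deps:
        argv += ["-d", dep]
    for out in outs:
        argv += ["-o", out]
    for param in params:
        argv += ["-p", param]
    # The command that the stage executes comes last.
    argv += shlex.split(command)
    return argv


# Hypothetical file and parameter names for a train stage.
train_argv = stage_add_command(
    name="train",
    command="python src/train.py",
    deps=["src/train.py", "data/prepared"],
    outs=["models/model.pkl"],
    params=["train.epochs"],
)
print(shlex.join(train_argv))
```

The printed line can be pasted into a terminal inside an initialised DVC project, or the list could be passed to `subprocess.run`.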

The resulting configuration file, dvc.yaml, defines the following for each stage:

- cmd: the command line that is executed by the stage

- deps: the list of dependencies

- params: all parameters or hyperparameters that are imported from the params.yaml file

- outs: the list of output files or output folders

Step 3: reproducing the data pipeline

I use the following command to reproduce the pipeline using DVC: dvc repro.

This reproduces the entire pipeline in the correct order according to the steps defined in dvc.yaml.
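How a correct order can follow from the stage definitions alone is shown in the minimal Python sketch below. It assumes hypothetical deps and outs for the three stages and uses a topological sort from the standard library; it illustrates the principle and makes no claim about how DVC resolves the order internally.

```python
from graphlib import TopologicalSorter  # available from Python 3.9

# Hypothetical stage definitions mirroring the deps/outs keys of dvc.yaml.
stages = {
    "evaluate": {"deps": ["models/model.pkl", "data/prepared"], "outs": ["metrics.json"]},
    "train": {"deps": ["data/prepared"], "outs": ["models/model.pkl"]},
    "preprocess": {"deps": ["data/data.xml"], "outs": ["data/prepared"]},
}

# Map every output path to the stage that produces it.
producer = {out: name for name, spec in stages.items() for out in spec["outs"]}

# A stage depends on every stage that produces one of its dependencies.
graph = {
    name: {producer[dep] for dep in spec["deps"] if dep in producer}
    for name, spec in stages.items()
}

execution_order = list(TopologicalSorter(graph).static_order())
print(execution_order)  # ['preprocess', 'train', 'evaluate']
```

The order in which the stages are written down does not matter; the links between outputs and dependencies determine the sequence.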

However, stages whose dependencies have not changed are skipped. If you want to run an individual stage, add its name to the command: dvc repro <stage-name>. Running dvc repro also creates a state file called dvc.lock, which records the results of the reproduction.
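The skipping behaviour can be pictured as a comparison between the current hashes of a stage's dependencies and the hashes recorded during the last run. The following Python sketch is a simplified model under that assumption; the `lock` dictionary is only a stand-in for the recorded state and does not reproduce the real dvc.lock format, and all names and file contents are hypothetical.

```python
import hashlib


def content_hash(data: bytes) -> str:
    """Return the hex MD5 digest of the given bytes."""
    return hashlib.md5(data).hexdigest()


def stage_is_up_to_date(dep_contents: dict, lock_entry: dict) -> bool:
    """A stage can be skipped when every dependency hash matches the recorded one."""
    current = {path: content_hash(data) for path, data in dep_contents.items()}
    return current == lock_entry


# Hypothetical dependency contents, held in memory to keep the sketch self-contained.
deps = {"data/data.xml": b"<rows/>", "src/preprocess.py": b"print('prep')"}

# First run: nothing is recorded yet, so the stage must run and its hashes are recorded.
lock = {}
first_run_skipped = stage_is_up_to_date(deps, lock)
lock = {path: content_hash(data) for path, data in deps.items()}

# Second run without changes: the stage can be skipped.
second_run_skipped = stage_is_up_to_date(deps, lock)

# After the data changes, the stage has to run again.
deps["data/data.xml"] = b"<rows><row/></rows>"
third_run_skipped = stage_is_up_to_date(deps, lock)

print(first_run_skipped, second_run_skipped, third_run_skipped)  # False True False
```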

After running dvc repro, I use dvc push to transfer my changes to the remote storage. I use dvc pull if I want to download my data from the remote storage again (similar to git pull).

Tip: we recommend that you commit dvc.lock immediately in order to save the current state and the results: git add dvc.lock && git commit -m "Save current reproduction state".

Using DVC in combination with workflow automation tools

By linking DVC with workflow automation tools such as GitHub Actions, you can ensure that changes to your data are consistent and reproducible. Each step of the version control process can be automated and monitored in order to minimise errors and ensure traceability.

Outlook

DVC can be used to track data and models in a similar way to Git. In that sense, you could say it is Git for data. It also helps us build a traceable machine learning pipeline and allows us to carry out experiments with our models and visualise the differences between them, which in turn improves traceability.
