26. February 2024 By Phuong Huong Nguyen and Veronika Tsishetska

Mass generation of machine-generated summaries with Aleph Alpha Luminous

Searching for suitable, relevant sources takes up a lot of time in editorial work. Editors mainly rely on subject-specific knowledge databases, which in turn contain a large number of entries. A summary of each entry helps the searcher to assess quickly whether that entry could be relevant. Summaries of every entry in a knowledge database therefore make daily editorial work easier and more efficient.

In this blog post, we pick up on the concept of previous posts in the adesso blog, for example "Machine-generated summarisation of texts with Aleph Alpha Luminous via R, Part 2", and report on a customer project that we implemented. The texts in this project were far longer than the average text in the widely used CNN/Daily Mail summarisation test data set.

Knowledge sources are often distributed in PDF format. In order to generate a machine summary from this source format, the PDF (or another format such as image or video) must first be transformed into text. The text can then be fed into a language model such as Aleph Alpha Luminous to generate a summary. When professionals have to make decisions based on summaries, it is essential that the summaries follow a similar scheme. The first part of this article deals with the pre-processing of texts, while the second part describes in detail the automatic generation of summaries from existing texts.

Description of PDF2Text parsing: The pre-processing of text for the task of generating summaries

Large language models need textual input before they can generate an accurate summary with reasonable effort. Since the source data is often stored as PDF files, the first step in our summary generation pipeline is to parse the PDF files into text files. After parsing, the text usually still contains noise: unnecessary tokens that contribute nothing to the correctness or content of the summary but still cost money when the language model processes them. Text cleansing is therefore an important step that removes the noise and prepares the data for the next step.

How we approach text cleansing depends on the context of the data. Website data, for example, requires different cleaning steps than reports (with fewer metadata standards) or journal articles (with more metadata standards such as DOI, ISBN and references). Because our data is heterogeneous, we develop a text cleansing process that is optimised as far as possible for the document types we expect to make up the majority of our data. The type of Natural Language Processing (NLP) task is a further criterion for defining the text cleansing pipeline. For summary generation, we follow the pipeline presented below; for other NLP tasks, it can be extended accordingly.

In the context of generating summaries, sections that provide no direct information on the main topic of the text play no role and are referred to as noise. A text that contains a lot of noise can lead the LLM to generate incorrect summaries. Noise is also counted, and billed, as tokens. Removing noise from the text input therefore both increases the correctness of the generated summaries and reduces costs.

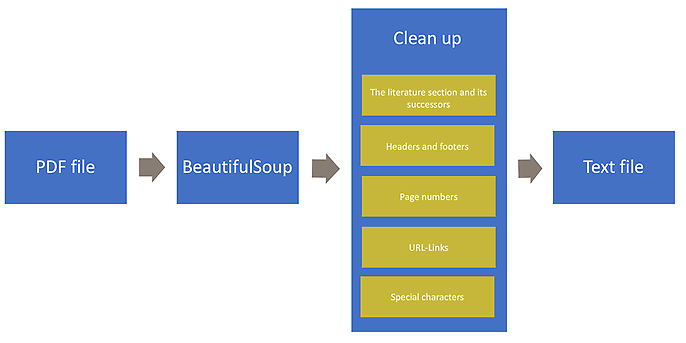

The most common document types in our customer example are reports, journal articles and books. These document types usually place the literature section at the end, and each page typically contains headers and footers. The literature section and everything that follows it, as well as headers and footers, are considered noise. In addition, all special characters that carry no meaning should be removed from the text input. Figure 1 shows our optimised pipeline for parsing a PDF file into text.

To clean up the data, the PDF file is first converted into an HTML/LXML representation using the PDFMinerPDFasHTMLLoader and BeautifulSoup libraries. This intermediate conversion helps to separate important from unimportant information. Then the clean-up takes place. Step by step, we remove the literature section and the sections that follow it, headers and footers, page numbers, URL links and all special characters that are not contained in the list {A-Za-z0-9ÄÖÜäöüß.!?_$%&:;@,-}. The extracted text is then summarised using the Aleph Alpha Luminous model. A detailed description is provided in the next section.
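The clean-up steps listed above can be sketched on plain text with regular expressions. This is a minimal illustration, not the project's implementation: the heading names that mark the literature section, the page-number pattern and the function name `clean_text` are assumptions, and header/footer removal is left out because it relies on the layout information of the intermediate HTML.

```python
import re

# Characters kept by the whitelist quoted in the text (whitespace is kept too).
ALLOWED = r"A-Za-z0-9ÄÖÜäöüß.!?_$%&:;@,\-"
DISALLOWED_RE = re.compile(rf"[^{ALLOWED}\s]")
URL_RE = re.compile(r"(?:https?://|www\.)\S+")
# Assumption: the literature section starts at a line that consists of one
# of these headings only.
REFERENCES_RE = re.compile(
    r"^\s*(References|Bibliography|Literature)\s*$",
    re.IGNORECASE | re.MULTILINE,
)
# Assumption: a page number is a line that contains nothing but digits,
# optionally prefixed with "Page".
PAGE_NUMBER_RE = re.compile(r"^\s*(?:Page\s+)?\d+\s*$", re.IGNORECASE | re.MULTILINE)


def clean_text(raw: str) -> str:
    """Remove references, page numbers, URLs and special characters."""
    match = REFERENCES_RE.search(raw)
    if match:
        # Drop the literature section and everything after it.
        raw = raw[: match.start()]
    raw = PAGE_NUMBER_RE.sub("", raw)
    raw = URL_RE.sub("", raw)
    raw = DISALLOWED_RE.sub(" ", raw)
    # Collapse the whitespace left behind by the removals.
    return re.sub(r"\s+", " ", raw).strip()
```

The order matters in this sketch: the literature section is cut first so that later steps do not spend time on text that is discarded anyway, and URLs are removed before the character filter would break them into fragments.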

Description of machine summarisation: The generation of guided summaries with Aleph Alpha

A summary is generated in two stages. Before the first stage, the text is pre-processed: to create manageable text units, it is broken down into sentences that respect a minimum and a maximum number of words. These sentences are then grouped into sections, known as chunks, which are passed to the language model in the first stage. Each chunk is converted into a concise summary. For this purpose, an Aleph Alpha chain is created with the prompt specified below, and "map_llm_chain" is then applied to each chunk.
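The segmentation that precedes the first stage could look roughly like the following sketch. The function names, the sentence-boundary rule and the fixed number of sentences per chunk are assumptions made for illustration; the project may use different rules.

```python
import re


def split_into_sentences(text, min_words, max_words):
    """Split text into sentences; merge short ones, cut over-long ones."""
    sentences = []
    buffer = []
    # Naive sentence boundary: whitespace after '.', '!' or '?'.
    for part in re.split(r"(?<=[.!?])\s+", text.strip()):
        buffer.extend(part.split())
        if len(buffer) < min_words:
            continue  # too short on its own: merge with the next sentence
        # Cut the buffer into pieces of at most max_words words.
        for start in range(0, len(buffer), max_words):
            sentences.append(" ".join(buffer[start:start + max_words]))
        buffer = []
    if buffer:
        # A short remainder at the end of the text is kept as its own sentence.
        sentences.append(" ".join(buffer))
    return sentences


def build_chunks(sentences, sentences_per_chunk):
    """Group consecutive sentences into chunks of a fixed size."""
    return [
        " ".join(sentences[i:i + sentences_per_chunk])
        for i in range(0, len(sentences), sentences_per_chunk)
    ]
```

In this sketch the minimum only controls merging, so the last piece of a cut sentence or the final remainder can still be shorter than `min_words`.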

    map_prompt_template = """### Instruction:
    Firstly, give the following text a concise and informative title, focusing on capturing the central themes or key subjects present in the text. Then, summarize the text. Only extract this information as JSON.

    ### Input:
    {{text}}

    ### Response:
    {"title":"""

    aa_model = AlephAlpha(
        model=self.model[0],
        maximum_tokens=self.maximum_tokens[0],
        stop_sequences=["###"],
        aleph_alpha_api_key=self.aleph_alpha_api_key[0],
        presence_penalty=0,
        frequency_penalty=0.5,
    )

    map_llm_chain = LLMChain(llm=aa_model, prompt=map_prompt)

    def process_chunk(chunk_text):
        return map_llm_chain.apply([{"text": chunk_text}])

The result of the first stage of summarisation is small, manageable sections ("chunks"), a summary for each section ("chunk summaries") and a heading for each section ("chunk titles").
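Because the map prompt ends with the opening fragment `{"title":`, the model only returns the remainder of the JSON object. A minimal sketch of how such a completion could be turned into a title and a summary is shown below. The function name and the `"summary"` key are assumptions; the actual key depends on what the model emits.

```python
import json

# The prompt ends with this fragment, so the completion continues from it.
PROMPT_SUFFIX = '{"title":'


def parse_chunk_response(completion: str) -> dict:
    """Rebuild the JSON object from the prompt suffix and the completion."""
    candidate = PROMPT_SUFFIX + completion
    # raw_decode parses the first JSON value and ignores trailing text,
    # for example whitespace that precedes the stop sequence.
    parsed, _end = json.JSONDecoder().raw_decode(candidate)
    return parsed
```

If the completion is not valid JSON, `raw_decode` raises `json.JSONDecodeError`; a production pipeline would need to catch that case and retry or skip the chunk.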

Before the results of the first stage are passed to the second stage of summary generation, the chunks are categorised into groups ("topics") with the help of the Louvain community detection algorithm. The chunks are combined according to the communities that the Louvain algorithm finds, which leads to a more comprehensive and coherent summary. An Aleph Alpha map-reduce chain then generates a text summary from the linked sections. Specific prompts are used to generate separate summaries of different aspects of the text: aim, methodology, results and implications. For each aspect there is a "map prompt" and a "combine prompt" (see the examples below).
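Community detection such as Louvain operates on a weighted graph, so the chunks first have to be related to each other. The sketch below builds such a graph as a list of weighted edges. It deliberately swaps in a simple bag-of-words cosine similarity; how the project measures similarity (for example with embeddings) is not stated in this post, and the function names and the threshold are assumptions.

```python
import math
import re
from collections import Counter
from itertools import combinations


def bag_of_words(text):
    """Lower-cased word counts of a text."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine_similarity(a, b):
    """Cosine similarity of two word-count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def similarity_edges(chunk_summaries, threshold=0.2):
    """Return (i, j, weight) edges for all sufficiently similar chunk pairs."""
    vectors = [bag_of_words(summary) for summary in chunk_summaries]
    edges = []
    for i, j in combinations(range(len(vectors)), 2):
        weight = cosine_similarity(vectors[i], vectors[j])
        if weight >= threshold:
            edges.append((i, j, weight))
    return edges
```

With NetworkX installed, these edges could be loaded with `Graph.add_weighted_edges_from` and passed to `networkx.community.louvain_communities(graph, weight="weight")`, which returns the communities as sets of chunk indices.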

Map prompt (here for the aspect "purpose"):

    map_prompt_template = """Write a 75-100 word summary of the following text concentrating on the purpose of the described study/ research:

    {text}

    CONCISE SUMMARY:"""

Combine prompt:

    ### Instruction:
    Please provide a clear, concise answer within 125 words to the following question. {{ question }} If there is no answer, say "NO_ANSWER_IN_TEXT".

    ### Input:
    Text: {{ text }}

    ### Response:

A question on the respective aspect is inserted in the "combine prompt" above.

questions:

- What is the purpose, or intention of the study/research described in the following text? What was the study/research done for?

- How was the study/research described in the following text conducted regarding the design, methodology, techniques, or approach?

- What are the findings, outcomes, results, or discoveries of the study/ research described in the following text?

- What are the implications, suggestions, impacts, drawbacks or limitations stemming from the findings of the study/ research described in the following text without directly reiterating the findings?
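Inserting the aspect question into the combine prompt can be sketched with plain string replacement, which leaves the `{{ text }}` placeholder untouched for the chain to fill in later. The dictionary keys and the function name are assumptions chosen to match the four aspects; the prompt and the questions are copied from above.

```python
COMBINE_PROMPT_TEMPLATE = """### Instruction:
Please provide a clear, concise answer within 125 words to the following question. {{ question }} If there is no answer, say "NO_ANSWER_IN_TEXT".

### Input:
Text: {{ text }}

### Response:"""

# One question per aspect; the keys are illustrative names.
ASPECT_QUESTIONS = {
    "purpose": "What is the purpose, or intention of the study/research described in the following text? What was the study/research done for?",
    "method": "How was the study/research described in the following text conducted regarding the design, methodology, techniques, or approach?",
    "findings": "What are the findings, outcomes, results, or discoveries of the study/ research described in the following text?",
    "implications": "What are the implications, suggestions, impacts, drawbacks or limitations stemming from the findings of the study/ research described in the following text without directly reiterating the findings?",
}


def build_combine_prompts(template, questions):
    """Create one combine prompt per aspect by filling in its question."""
    return {
        aspect: template.replace("{{ question }}", question)
        for aspect, question in questions.items()
    }
```

Each resulting prompt still contains `{{ text }}`, so it can serve as the template for the reduce step of the corresponding chain.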

The map-reduce chain with the map and combine prompts that match the respective aspect is applied to the linked sections. Concatenating the results of the four chains (the answers to all questions) yields the final summary of the text.

    # Language model for the map step.
    map_llm = AlephAlpha(
        model=self.model[0],
        maximum_tokens=self.maximum_tokens[0],
        stop_sequences=["###"],
        aleph_alpha_api_key=self.aleph_alpha_api_key[0],
        presence_penalty=0,
        frequency_penalty=0.5,
    )

    # Language model for the reduce (combine) step.
    reduce_llm = AlephAlpha(
        model=self.model[0],
        maximum_tokens=self.maximum_tokens[0],
        stop_sequences=["###"],
        aleph_alpha_api_key=self.aleph_alpha_api_key[0],
        presence_penalty=0,
        frequency_penalty=0.5,
    )

    # chain, chain2, chain3 and chain4 are the map-reduce chains for the four
    # aspects; their construction is not shown in this excerpt.
    output_purpose = chain({"input_documents": docs}, return_only_outputs=True)
    purpose = output_purpose["output_text"]

    output_method = chain2({"input_documents": docs}, return_only_outputs=True)
    method = output_method["output_text"]

    output_findings = chain3({"input_documents": docs}, return_only_outputs=True)
    findings = output_findings["output_text"]

    output_implications = chain4({"input_documents": docs}, return_only_outputs=True)
    implications = output_implications["output_text"]

    # Concatenate the four answers into the final summary.
    final_summary = (
        "Purpose: " + str(purpose) + "\n"
        + "Method: " + str(method) + "\n"
        + "Findings: " + str(findings) + "\n"
        + "Implications: " + str(implications)
    )

Headings of chunks that belong to the same community are also summarised with an Aleph Alpha Chain, so that there is only one heading for each group.

    title_prompt_template = """Write an informative title that summarizes each of the following groups of titles. Make sure that the titles capture as much information as possible, and are different from each other:

    {text}

    Return your answer in a numbered list, with new line separating each title:
    1. Title 1
    2. Title 2
    3. Title 3

    TITLES:
    """

    title_llm = AlephAlpha(
        model=self.model[0],
        maximum_tokens=self.maximum_tokens[0],
        stop_sequences=["###"],
        aleph_alpha_api_key=self.aleph_alpha_api_key[0],
        presence_penalty=0,
        frequency_penalty=0.5,
    )

    title_llm_chain = LLMChain(llm=title_llm, prompt=title_prompt)
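Since the title prompt asks for a numbered list, the completion has to be split back into individual titles before they can be assigned to the groups. A minimal sketch of that parsing step follows; the function name is an assumption, and the pattern accepts both "1." and "1)" as numbering.

```python
import re

# One numbered entry per line, e.g. "1. Title" or "2) Title".
NUMBERED_LINE_RE = re.compile(r"^\s*\d+[.)]\s*(.+?)\s*$", re.MULTILINE)


def parse_titles(completion: str) -> list:
    """Extract the titles from a numbered list returned by the model."""
    return NUMBERED_LINE_RE.findall(completion)
```

Lines that do not start with a number are ignored, so it is worth checking that the number of parsed titles matches the number of groups before using them.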

After the second stage of summary generation, the intermediate results of the map-reduce chain are available. Two examples are shown below. We are currently optimising the results further in order to achieve a clearer separation between method and results.

Source 1: Working Papers of the KVF NRW, No. 10

Original summary

Fast fashion is produced, worn and disposed of in a very short space of time. The aim of the study is to develop buyer clusters in the clothing sector and to inform and educate them. Consumers should be encouraged to adopt more responsible behaviour when buying, storing and recycling clothing.

Result of Aleph Alpha Luminous:

- Aim: The study was conducted to determine the motives and barriers to buying fast fashion and the actual barriers to sustainable fashion. The results of both components served as the basis for the development of a quantitative questionnaire to determine the motives for buying fast fashion and the actual barriers to unsustainable fashion.

- Method: The study/research was conducted using a mixed-methods approach. Qualitative interviews were conducted with consumers. The results were analysed using qualitative content analysis. The results of both components served as the basis for the development of a quantitative questionnaire to determine the motives for buying fast fashion and the actual barriers to unsustainable consumption. This study analyses the motives and barriers for buying fast fashion.

- Result: The results of the study show that primary and secondary school pupils learn how important it is to value clothing and how they can save resources by shopping sustainably. In secondary school, pupils should be educated about the environmental impact of their clothing purchases.

Source 2: Article of the MDPI

Original summary

The ethics of the fast fashion industry have been challenged by the emergence of new consumption paradigms such as anti-consumerism and sustainable consumption. The aim of this study is to investigate the conceptual structure of fast fashion avoidance beliefs that have led to anti-fast fashion consumption. Data were collected from female consumers aged between 20 and 39 years who have experience of buying fast fashion brands in Korea and Spain. The structure of avoidance beliefs was compared using a second-order factor analysis and the data was analysed using multiple regression. The structure of avoidance beliefs showed satisfactory validity and reliability in Korea, while deindividuation and strangeness were not included as negative beliefs in Spain. An analysis of the relationship between negative beliefs and anti-consumption showed that deindividuation and strangeness had a positive influence on anti-consumption of fast fashion in Korea. In Spain, poor performance and irresponsibility had a positive effect, while an overly fashionable style had a negative influence on the rejection of fast fashion. These findings contribute to the literature on the anti-consumption of fast fashion as part of the ethical clothing consumption movement. We can understand the anti-consumption of fast fashion by global consumers, diagnose the current status of fast fashion in the global market, and even suggest future directions for fast fashion retailers.

Result of Aleph Alpha Luminous:

- Aim: The aim of this study is to analyse the anti-fast fashion consumption of global consumers. Specifically, the study has two objectives. First, this study attempts to recommend the conceptual structure of anti-fast fashion beliefs in Korea and Spain by using the conceptual structure proposed by Kim and colleagues. The results show that Korean consumers avoid fast fashion when they perceive fast fashion as strange and undifferentiated.

- Method: The study was based on an intercultural comparison between Spain and Korea. The study found that there are significant differences in cultural values, beliefs and behaviours between the two countries. However, there are also some similarities in terms of social norms, customs and behaviour. The study also showed that there are some differences in economic development and technology adoption between the two countries.

- Results: The study has shown that there are significant differences between the two countries in terms of cultural values, beliefs and behaviours. However, there are also some similarities in terms of social norms, customs and traditions. The study also showed that there are some differences in economic development and technology adoption between the two countries.

The study investigated the psychological dissidence and cultural distance caused by the damage to individual identity under the cultural pressure of fast fashion consumption.

Conclusion

Automatic summary generation for Utopia with Aleph Alpha Luminous enables the efficient processing and extraction of relevant information from a large number of PDF files. The process is divided into two main phases, and the first phase is preceded by the pre-processing of the text and the generation of "chunks".

Pre-processing begins with parsing PDF files into text formats, followed by comprehensive text cleansing. This step is crucial to remove noise and ensure that the summaries generated are accurate and relevant. Particular attention is paid to the removal of 'noise', such as bibliographic references, headers and footers and unimportant special characters.

In the first phase of summary generation, the cleaned texts are divided into manageable "chunks", and a concise summary is generated for each of them. These "chunks" form the basis for the second phase, in which they are grouped thematically. An Aleph Alpha map-reduce chain then generates comprehensive summaries of different aspects of the text using specific prompts for purpose, methodology, results and implications.

The result is a final summary of the text that is organised into clear sections and provides a comprehensive overview of various aspects of the content. The method used helps to facilitate the workflow by enabling the efficient processing of large amounts of knowledge data.
